Welcome to the Linkurious Enterprise administrator documentation. This documentation will help you install, run and customize Linkurious Enterprise.

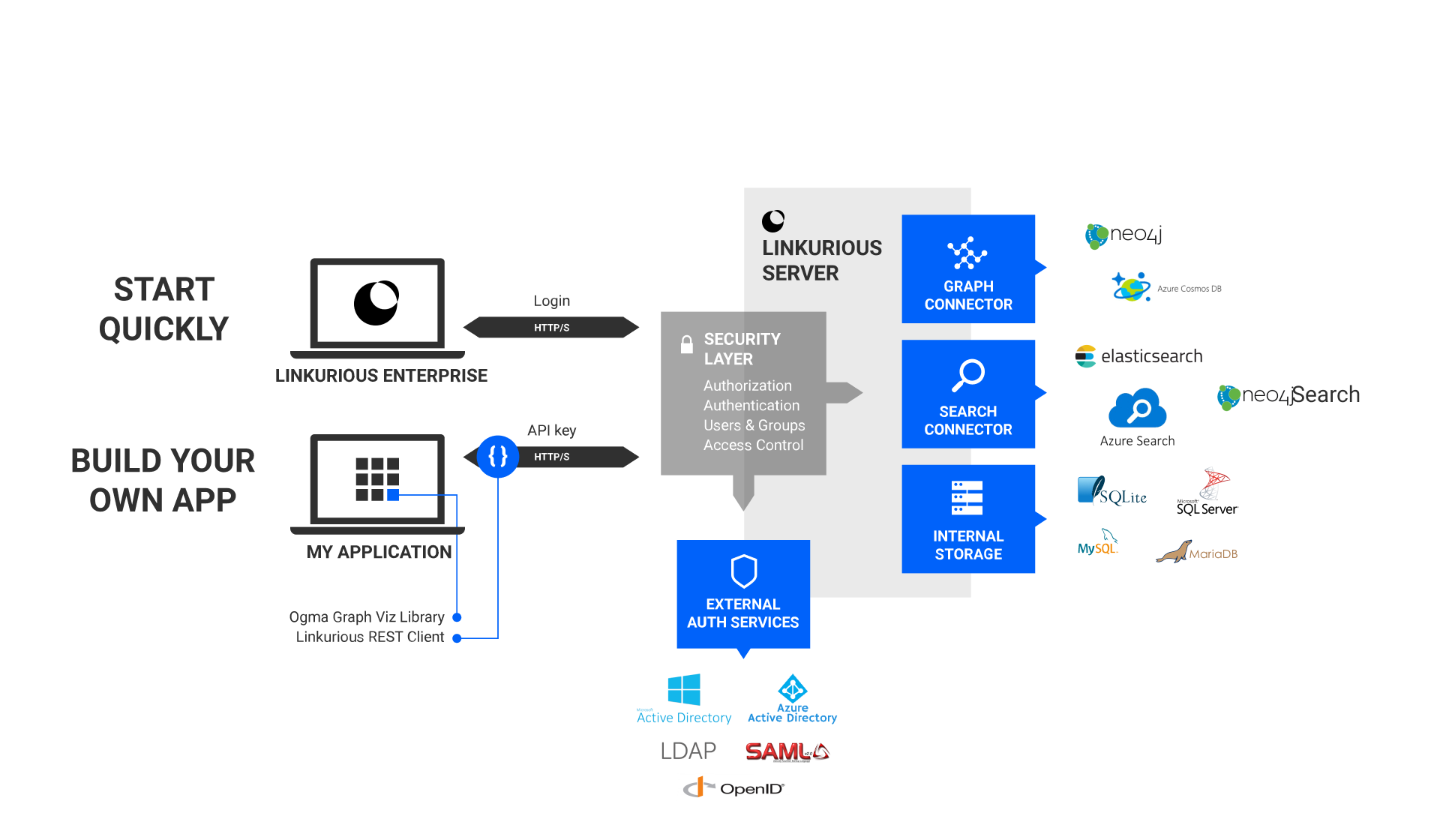

Linkurious Enterprise is a three-tier application.

The presentation layer is a Web application. It uses our graph visualization library, Ogma, to allow rich interactions with the graph. It also provides a user interface to enable data administration and collaboration among end users.

The presentation layer communicates with the logic layer via a JSON-based REST API. Custom presentation-layer applications can be developed on top of the logic layer.

The logic layer is a Node.js-based server. It provides a unified REST API to read, write and search graph databases from multiple vendors (Neo4j and Cosmos DB). It also implements a security layer with modular authentication that enables role-based access control policies. It can be connected to multiple graph databases at the same time and offers high-level APIs for collaborative exploration of graphs: users can create, share and publish graph visualizations, and multiple users can edit graph data.

Administrators can control it from its REST API for easy automation and deployment.

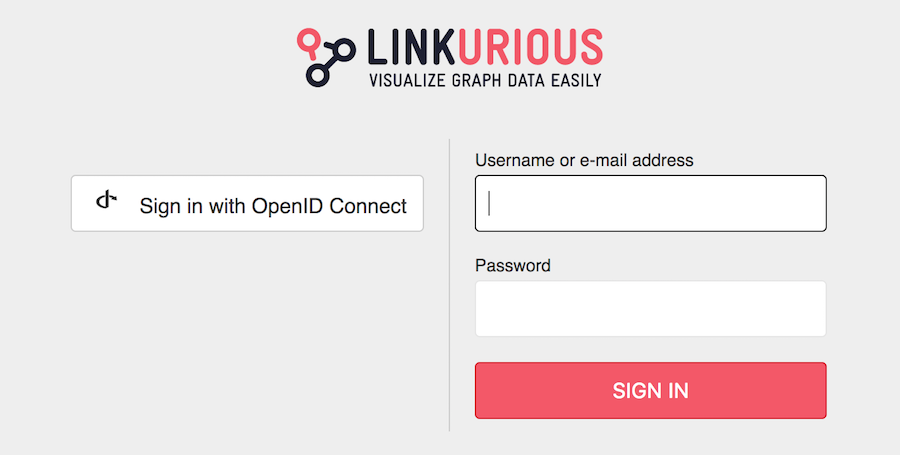

Multiple external authentication providers are supported (LDAP, Microsoft Active Directory, Microsoft Azure Active Directory, Google Suite, OpenID Connect, SAML2 / ADFS).

The data layer supports several graph databases, as well as indexation engines.

Consult with your vendor to make sure that your graph database is installed on appropriate hardware and configured for good performance:

Make sure that your graph database is secure:

Keep in mind that Linkurious Enterprise can be used without Elasticsearch, see search options.

If you are using Linkurious Enterprise with Elasticsearch

By default, SQLite is used for the user-data store. SQLite is not recommended for production environments: switch to MySQL, MariaDB or MSSQL instead.

Schedule regular backups of the user-data store:

Make sure your user-data-store database is secure

If you need high-availability, set up replication

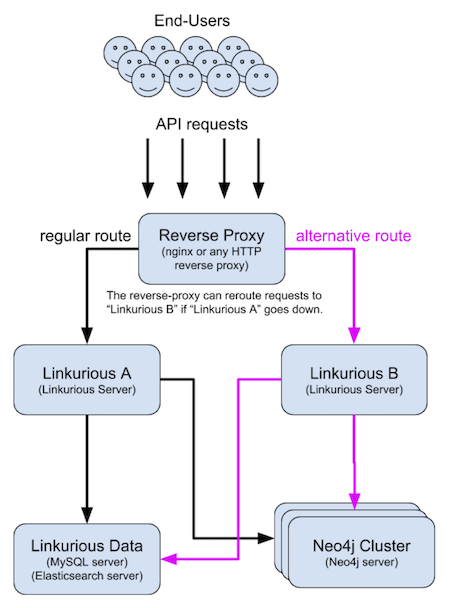

- linkurious/data directory
- forceHttps: true in Web Server configuration)

Linkurious Enterprise can be set up with a backup instance to allow for continuity of service when the main server crashes.

For this setup:

A reverse proxy is then configured to send requests to the backup server when the main server is down. If you are using nginx, this sample configuration can be used:

    http {
        # define the "backend" upstream
        upstream backend {
            # main server
            server linkurious-main.example.com;
            # backup server
            server linkurious-backup.example.com backup;
        }

        # redirect all queries to the "backend" upstream
        server {
            location / {
                proxy_pass http://backend;
            }
        }
    }

See nginx documentation for more details.

The user-data store database (containing visualizations, saved queries, user, groups, etc) is stored in a SQL database.

By default, this database is an SQLite database (located at linkurious/data/database.sqlite).

In production, the use of a MySQL/MariaDB/MSSQL database is recommended.

These databases can be located on a remote server.

The default user-data store (SQLite) is not encrypted.

Encryption is available with the following vendors:

Yes, when using an external user-data store (e.g. MariaDB, MySQL or MSSQL), the SQLite files can be deleted.

The configuration file contains all configurable options, as well as the configuration options of all configured data sources (e.g. User-Data Store host/port/username/encrypted password; Graph Database URL/username/encrypted password; Index Search URL/username/encrypted password, etc). All passwords/secrets in the configuration file are encrypted before storage.

The configuration file, like the rest of the data folder, should be considered private and not be readable by anyone other than the Linkurious Enterprise service account.

All application secrets stored by Linkurious Enterprise (Graph Database credentials, User-Data Store credentials, Index Search credentials, SSL certificate passphrase, etc.) are encrypted using the AES-256-CTR algorithm.

User passwords are strongly hashed before being stored in the database. Passwords for LDAP and other external authentication solutions are not stored at all.

The audit trail files are generated in linkurious/data/audit-trail by default.

This path can be set in the audit trail configuration.

The audit trail contains sensitive information and should be secured. It should be owned and readable only by the Linkurious Enterprise service account.

The data directory contains logs, configuration files, and, if enabled, audit trails. This information is sensitive, and the directory should be owned and readable only by the Linkurious Enterprise service account.

A service account is an operating system user account with restricted privileges that is used only to run a specific service and own the data related to this service. Service accounts are not intended to be used by people, except for performing administrative operations. Access to service accounts is usually tightly controlled using privileged access management solutions.

Service accounts prevent other users and services from reading or writing to sensitive files in the directories that they own, and are themselves prevented from reading and writing to other parts of the file system where they are not owners.
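The ownership rules above can be applied with standard Unix tools. The following is a minimal sketch, assuming a Unix-like host; the function names and any path you pass in are hypothetical and not part of Linkurious Enterprise.

```shell
# Sketch: restrict a Linkurious Enterprise data directory to its owner.
# Run it as the service account (or as root).

# secure_data_dir DIR: remove all "group" and "other" permissions, recursively.
secure_data_dir() {
  local dir="$1"
  [ -d "$dir" ] || { echo "not a directory: $dir" >&2; return 1; }
  chmod -R go-rwx "$dir"
}

# is_private PATH: succeed only if PATH grants nothing to group and others.
is_private() {
  # Characters 5-10 of the "ls -ld" mode string are the group/other bits.
  [ "$(ls -ld "$1" | cut -c5-10)" = "------" ]
}
```

For example, `secure_data_dir /opt/linkurious/data` followed by `is_private /opt/linkurious/data` (the path is only an illustration). Changing the owner itself, for instance with `chown -R linkurious: <data directory>`, usually requires root privileges.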

We do not support Kerberos as of now (but we support many other third-party authentication services).

Linkurious Enterprise creates three types of logs:

- data/logs/analytics.log: Usage telemetry (GDPR safe, not sensitive information)
- data/logs/linkurious.log and linkurious.exceptions.log: Server debugging logs (may contain graph queries).
- data/audit-trail/audit-trail.log: See audit trail log format details.

If your LDAP server supports secure LDAP, use the "ldaps://" protocol in your LDAP configuration.

If you need authentication and transport layer security for Elasticsearch:

To customize supported TLS ciphers, in the general configuration, set tlsCipherList in the server section.

Here is an example, based on Mozilla's recommended cipher list:

    "tlsCipherList": "TLS_AES_128_GCM_SHA256:TLS_AES_256_GCM_SHA384:TLS_CHACHA20_POLY1305_SHA256:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384:!eNULL:!aNULL"

We try to keep the Linkurious Enterprise update process as simple as possible. However, some configurations can require specific manual steps.

Before you upgrade to a new version of Linkurious Enterprise, consider browsing our public resources to verify whether there are specific instructions that apply to your configuration or to activities you are planning.

PEM (for Privacy-Enhanced Mail) is a file format for storing and sending cryptographic keys and certificates.

To verify that a certificate is PEM-encoded, open it with a text editor; it should look something like this:

    -----BEGIN CERTIFICATE-----
    MIICLDCCAdKgAwIBAgIBADAKBggqhkjOPQQDAjB9MQswCQYDVQQGEwJCRTEPMA0GA1UEChMGR251VExTMSUwIwYDVQQLExxHbnVUTFMgY2VydGlmaWNhdGUgYXV0aG9yDwEB/wQFAwMHBgAwHQYDVR0OBBYEFPC0gf6YEr+1KLlkQAPLzB9mTigDMAoGCCqGSM49BAMCA0gAMEUCIDGuwD1KPyG+hRf88MeyMQcqOFZD0TbVleF+UsAGQ4enAiEAl4wOuDwKQa+upc8GftXE2C//4mKANBC6It01gUaTIpo=
    -----END CERTIFICATE-----

If you have a DER-encoded certificate (binary), it can be converted to PEM:

    openssl x509 -inform der -in certificate.cer -out certificate.pem

- Crypto Shell Extensions)
- details tab
- Copy to file...
- Next
- Base-64 encoded X.509 (.CER) and click Next
- Next
- Finish

Most graph vendors support search strategies other than Elasticsearch. See details on our search options page.

Yes. See the geospatial configuration options for further details.

Yes, you can configure ArcGIS as the tile-server for geo-spatial mode. The ArcGIS documentation describes the API endpoint that is compatible with Linkurious Enterprise:

    http://<MapServer-url>/tile/{z}/{y}/{x}

For example:

- ./linkurious/start.sh: start the server
- ./linkurious/stop.sh: stop the server
- ./linkurious/menu.sh: open the management console
- ./linkurious/menu.sh status: print the current status of the server
- ./linkurious/menu.sh install: install as a system-wide service (requires root)
- ./linkurious/menu.sh uninstall: remove from system-wide services (requires root)
- ./linkurious/menu.sh help: show advanced options

Depending on the configuration options specified, enabling the audit trail can have an impact on performance. See the audit trail documentation for details.

Linkurious Enterprise is a Web-application server. It needs to be installed on a server and can then be accessed by multiple users using their Web browser.

Technical requirements for the machine used to install the Linkurious Enterprise Web-application server:

Linkurious Enterprise hardware requirements change according to your needs and setup. Here are some scenarios with their suggested minimum hardware configurations.

Projects with up to 20 users and few alerts.

Using the embedded Elasticsearch¹:

Not using the embedded Elasticsearch:

Projects with up to 100 users and tens of alerts.

Using the embedded Elasticsearch¹:

Not using the embedded Elasticsearch:

Project with more than 100 users and several alerts.

To maintain stable performance, it is necessary to move heavily loaded components to well-dimensioned dedicated servers/clusters:

Hardware requirements only for the Linkurious Enterprise server:

Linkurious Enterprise requires a 64-bit system to run.

¹ The embedded Elasticsearch is not recommended when dealing with large amounts of data, see Elasticsearch documentation.

² Some extra space is required for the Elasticsearch full-text index. This space is proportional to the size of your graph database. A (very) rough estimate could be 50% of your graph database (it also depends on the actual data density).

³ It is possible to configure Elasticsearch for higher memory usage, please contact us at support@linkurio.us.

⁴ It is possible to configure Linkurious Enterprise for higher memory usage, please contact us at support@linkurio.us.

Please keep in mind that these technical requirements are for Linkurious Enterprise server only. For hardware requirements regarding your graph database, please refer to these guides:

Linkurious Enterprise includes an embedded Elasticsearch instance for search capabilities. Please keep in mind that this embedded instance will only work for smaller graphs (less than 50M nodes + edges). For larger graphs, you will need to deploy an Elasticsearch cluster. Please refer to Elasticsearch's hardware requirements guide for details.

Linkurious Enterprise server can be deployed on the following platforms:

Linkurious Enterprise depends on Node.js, which requires a Linux kernel >= 3.10 and a GLIBC >= 2.17.

You can check the Linux kernel and the GLIBC version available on your system on http://distrowatch.com.
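The kernel and GLIBC versions can also be checked directly on the target machine. This is a sketch, assuming a glibc-based Linux system where `getconf` and a `sort` that supports `-V` (version sort) are available; the function names are illustrative only.

```shell
# version_ge A B: succeed when version A is greater than or equal to version B.
version_ge() {
  # With a version sort, B comes first exactly when B <= A.
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# check_node_prereqs: compare this host with the minimums quoted above
# (Linux kernel >= 3.10, GLIBC >= 2.17).
check_node_prereqs() {
  local kernel glibc
  kernel="$(uname -r)"
  kernel="${kernel%%-*}"   # "5.15.0-91-generic" -> "5.15.0"
  glibc="$(getconf GNU_LIBC_VERSION 2>/dev/null | awk '{print $2}')"

  version_ge "$kernel" "3.10" || { echo "kernel $kernel is older than 3.10" >&2; return 1; }
  [ -n "$glibc" ] || { echo "could not detect GLIBC on this system" >&2; return 1; }
  version_ge "$glibc" "2.17" || { echo "GLIBC $glibc is older than 2.17" >&2; return 1; }
  echo "kernel $kernel, GLIBC $glibc: OK"
}
```

Calling `check_node_prereqs` prints a one-line summary on success and returns a non-zero status when one of the two minimums is not met.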

Technical requirements for users that access Linkurious Enterprise with their Web browser:

Hardware requirements of the Linkurious Enterprise Web client vary with the size of the visualized graphs. For up to 500 nodes and edges in a single visualization, we recommend using a machine with 4 GB RAM and 2 CPU cores @ 1.6 GHz.

End-users will access Linkurious Enterprise through a Web browser. The following browsers are officially supported:

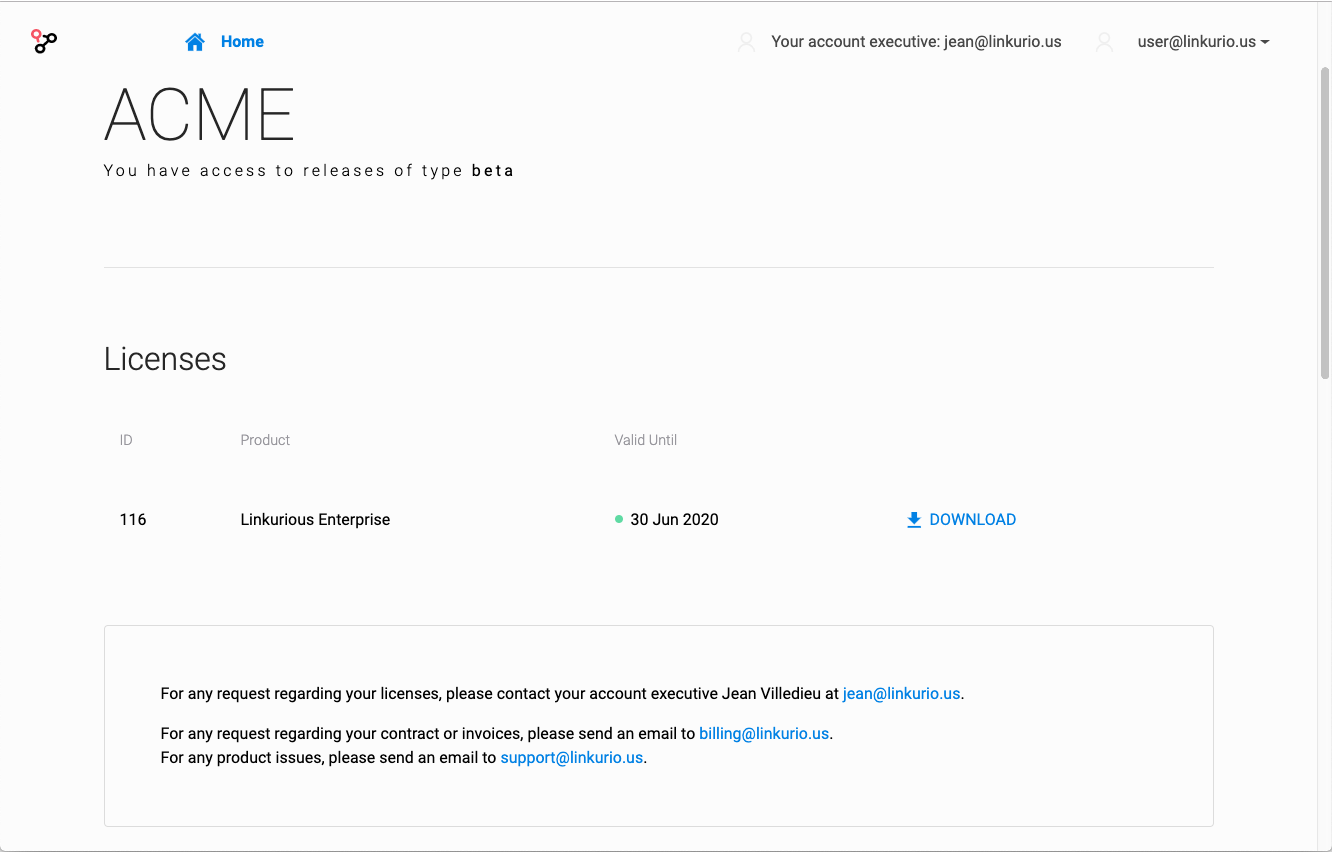

The latest version of Linkurious Enterprise can be downloaded from https://get.linkurio.us/.

Log in with the username and password created during the purchase process, then go to the download section for the relevant license (if you have several). From there, you can download the package for your platform.

The ZIP file contains:

Please see the Linkurious Enterprise version compatibility matrix and our documentation on how to update Linkurious Enterprise.

To work properly, Linkurious Enterprise only needs permissions (including write access) on the whole application directory; no administrative rights are needed.

The only exception may be related to operating system security policies that prevent standard users from binding applications to the first 1024 port numbers. See the web server configuration to learn more about this issue and how to avoid granting administrative rights.

As a best practice, it is advised to create a dedicated service account (e.g. linkurious) with the minimum level of permissions.

    > unzip linkurious-linux-v3.0.14.zip
    > cd linkurious-linux

linkurious-linux/data/config/production.json (see how to configure a data-source)

See how to start Linkurious Enterprise on Linux.

linkurious-windows folder

linkurious-windows/data/config/production.json (see how to configure a data-source)

See how to start Linkurious Enterprise on Windows.

    > unzip linkurious-osx-v3.0.14.zip
    > cd linkurious-osx

linkurious-osx/data/config/production.json (see how to configure a data-source)

See how to start Linkurious Enterprise on Mac OS.

    > docker load -i linkurious-docker-v3.0.14.tar.gz

The Linkurious Enterprise docker image exposes the ports 3000 and 3443 for HTTP and HTTPS connections respectively.

These ports should be mapped on the host machine to allow user connections.

Please visit the docker documentation to learn how to publish the ports of a container.

Even if not strictly necessary, the best practice is to define external named volumes to store application data outside the container.

The Linkurious Enterprise docker image doesn't declare any volume. However, the folders below should be preserved when upgrading Linkurious Enterprise and should therefore be mapped to external volumes:

- /data stores Linkurious Enterprise configuration, logs and application data.
- /elasticsearch stores the embedded Elasticsearch data.

Please visit the docker documentation to learn how to configure volumes.

Here is an example to create named volumes (an arbitrary name can be chosen):

    docker volume create lke-data
    docker volume create lke-elasticsearch

See how to start Linkurious Enterprise with docker.

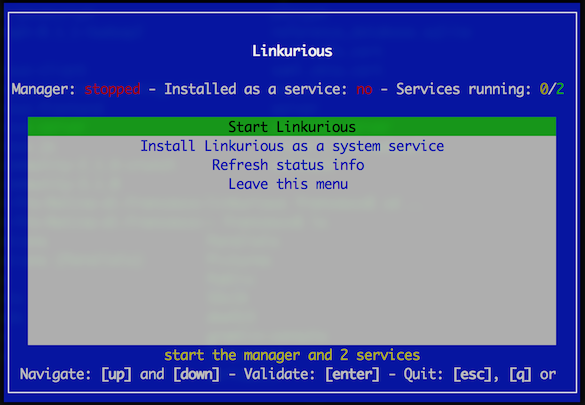

In order to run Linkurious Enterprise automatically when the operating system starts, it is possible to install it as a system service on Linux, Mac OS and Windows.

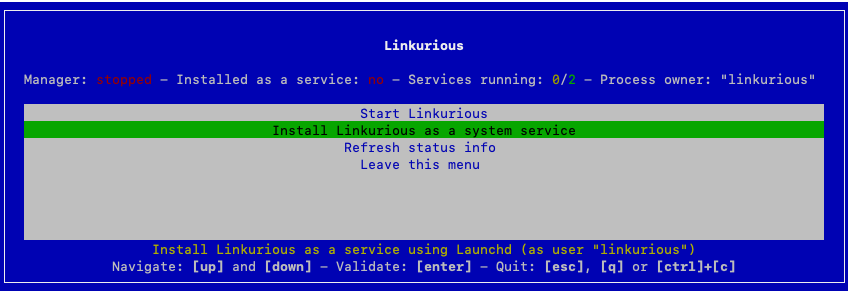

Open the administration menu by running menu.sh, menu.bat or menu.sh.command in the Linkurious Enterprise folder.

Click on Install Linkurious as a system service (administrative rights may be needed to successfully complete the task).

Linkurious Enterprise automatically detects the owner of the folder and will use that user as the process owner. It is possible to use a different user by running the menu script with the option --user=USER (where USER is the desired process owner with adequate permissions).

When Linkurious Enterprise is installed as a service, the administration menu (opened by running menu.sh, menu.bat or menu.sh.command in the Linkurious Enterprise folder) will show the current status of the service as well as a new entry to Uninstall Linkurious from system services.

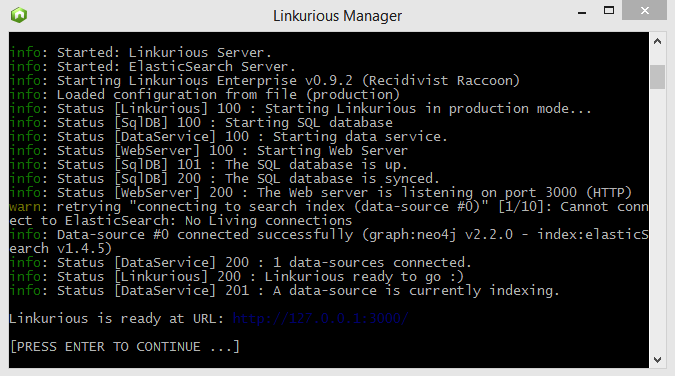

To start Linkurious Enterprise, run the start.sh script in the linkurious-linux directory.

Alternatively, run the menu.sh script and click Start Linkurious.

By default, the Linkurious Enterprise server will listen for connections on port 3000.

However, some firewalls block network traffic on ports other than 80 (HTTP).

See the Web server configuration documentation to learn how to make Linkurious Enterprise listen on port 80.

To start Linkurious Enterprise, run the start.bat script in the linkurious-windows directory.

Alternatively, run the menu.bat script and click Start Linkurious.

The firewall of Windows might ask you to authorize connections to Linkurious Enterprise. If so, click on Authorize access.

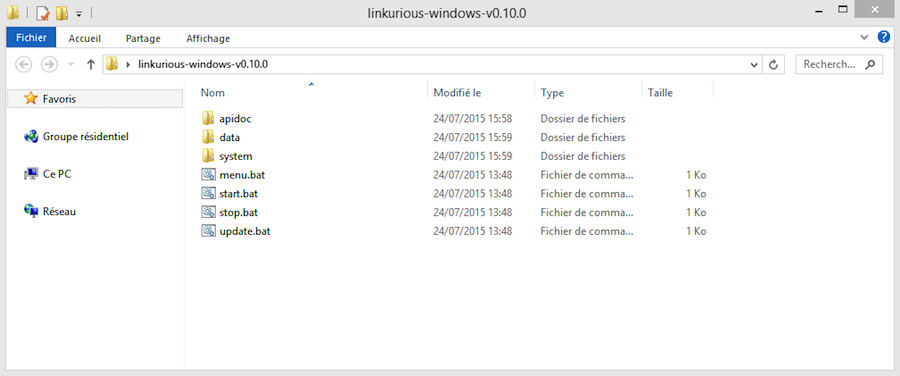

Content of the linkurious-windows directory:

Linkurious Enterprise starting up on Windows:

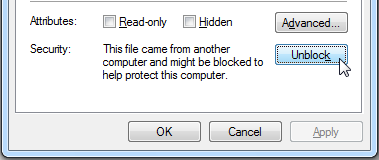

Mac OS prevents you from running applications downloaded from the internet.

To solve this problem, please run the following command before starting Linkurious Enterprise.

This will remove the attributes used by the operating system to identify Linkurious Enterprise files as untrusted.

    xattr -rc <Linkurious_home_directory>

To start Linkurious Enterprise, run the start.sh.command script in the linkurious-osx directory.

Alternatively, run the menu.sh.command script and click Start Linkurious.

To start a Linkurious Enterprise docker image, please use the docker run command.

Here is an example:

    docker run -d --rm \
      -p 3000:3000 \
      --mount type=volume,src=lke-data,dst=/data \
      --mount type=volume,src=lke-elasticsearch,dst=/elasticsearch \
      linkurious:3.0.14

If you choose to mount a host machine folder as a volume, please make sure that the user within the container has read and write access to the volume folders. By default, Linkurious Enterprise runs as the linkurious user (uid: 2013). You can do that by adding a --user option to the docker run command. The folders that you want to mount must exist before starting Docker, otherwise Linkurious Enterprise will fail to start due to permission errors. Please read the docker documentation to learn more.

Here is an example:

    docker run -d --rm \
      -p 3000:3000 \
      --mount type=bind,src=/path/to/my/data/folder,dst=/data \
      --mount type=bind,src=/path/to/my/elasticsearch/folder,dst=/elasticsearch \
      --user "$(id -u):$(id -g)" \
      linkurious:3.0.14

If no user is set, the Linkurious Enterprise container will check for appropriate file permissions and change file permissions if necessary.

By default, Linkurious Enterprise runs as the user linkurious, who only exists in the container, not on the host. As a consequence, it is not trivial to set up mount folders on the host for which this user has write permissions. To make this process easier, file permissions will be set automatically if you run the container as root, and a helpful error message will be printed if file permissions cannot be fixed automatically.

If you are setting memory limits on the running container (for example with the argument --memory 1024m), you may need to adapt the amount of memory used by the Linkurious Enterprise instance.

When setting '--max-old-space-size', please take into account the full Node.js process memory by subtracting roughly 50m from the memory allocated to the container.

Considering you are not using the embedded Elasticsearch instance:

    docker run -d --rm \
      -p 3000:3000 \
      --mount type=bind,src=/path/to/my/data/folder,dst=/data \
      --memory 1024m \
      -e NODE_OPTIONS='--max-old-space-size=984' \
      linkurious:3.0.14

We do not recommend using the embedded Elasticsearch in a memory-limited container.
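The heap-size arithmetic described above can be scripted so that the two values stay in sync. This is a minimal sketch: the function name and the default headroom of 50 MB are assumptions based on the "roughly 50m" guidance (the example above uses a slightly smaller margin, 984 for a 1024m container).

```shell
# node_heap_mb CONTAINER_MB [HEADROOM_MB]
# Print a value for --max-old-space-size, leaving HEADROOM_MB (default: 50)
# of the container memory to the rest of the Node.js process.
node_heap_mb() {
  local container_mb="$1" headroom_mb="${2:-50}"
  if [ "$container_mb" -le "$headroom_mb" ]; then
    echo "container memory must exceed the headroom (${headroom_mb} MB)" >&2
    return 1
  fi
  echo $(( container_mb - headroom_mb ))
}

# Possible usage (not executed here):
#   docker run -d --rm --memory 1024m \
#     -e NODE_OPTIONS="--max-old-space-size=$(node_heap_mb 1024)" \
#     linkurious:3.0.14
```

Keeping the headroom as a parameter makes it easy to adjust if the process is observed to need more or less memory outside the heap.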

You may also pass in environment variables that can be expanded in the configuration.

## Kubernetes

Please read the previous section on starting a Linkurious Enterprise instance using docker, and the section on fault tolerance.

A simple way to test out Linkurious Enterprise using Kubernetes is to create a simple deployment, using only one replica, and allocate a PersistentVolume for both of the volumes (lke-data, lke-elasticsearch) described above.

In production however you would want to follow the fault tolerance guide and use a StatefulSet, with a main/failover strategy, and the appropriate strategy configured for your load-balancer or ingress.

Run the stop.sh script in the linkurious-linux directory.

Alternately, run menu.sh and click Stop Linkurious.

Run the stop.bat script in the linkurious-windows directory.

Alternately, run menu.bat and click Stop Linkurious.

Run the stop.sh.command script in the linkurious-osx directory.

Alternately, run menu.sh.command and click Stop Linkurious.

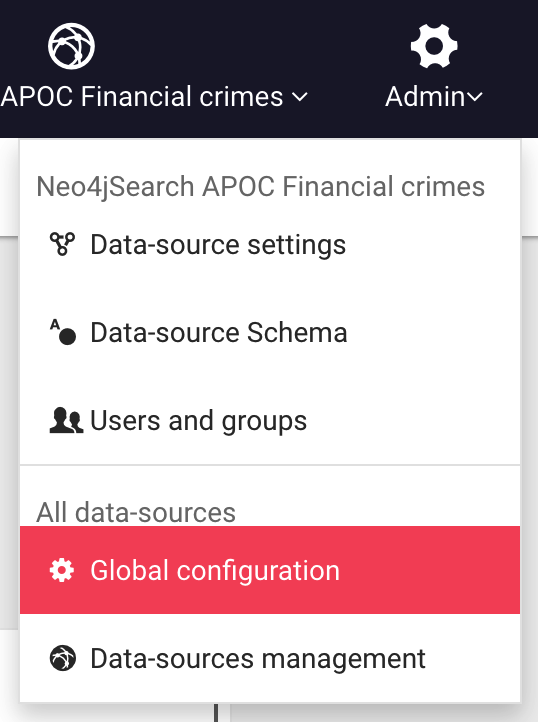

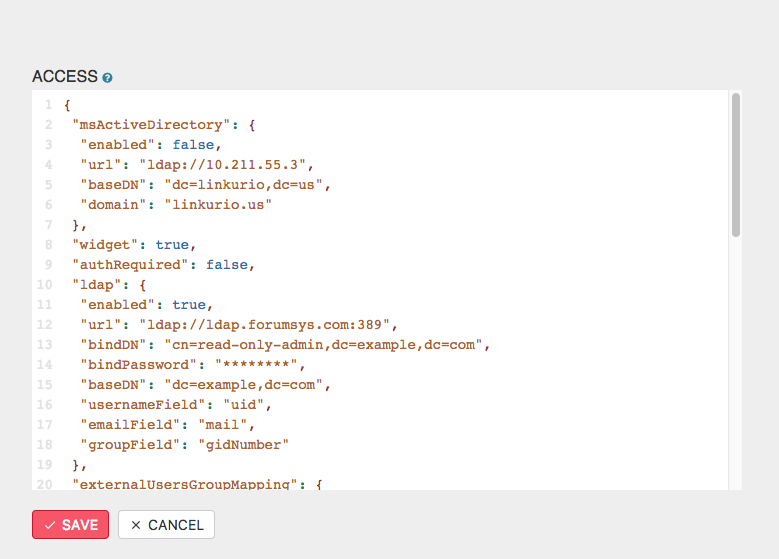

To edit the Linkurious Enterprise configuration, you can either edit the configuration file located at linkurious/data/config/production.json

or use the Web user-interface:

Using an administrator account, access the Admin > Configuration menu to edit the Linkurious Enterprise configuration:

Some configuration changes require a restart to be applied. Linkurious Enterprise will notify you about it and offer to restart from the Web UI, but only if you made the changes from the Web UI itself. If you modified the production.json file manually, changes won't be applied immediately and you will need to restart Linkurious Enterprise.

Configuration keys are divided by category.

Password fields will always be hidden from the Web UI, but they can be edited.

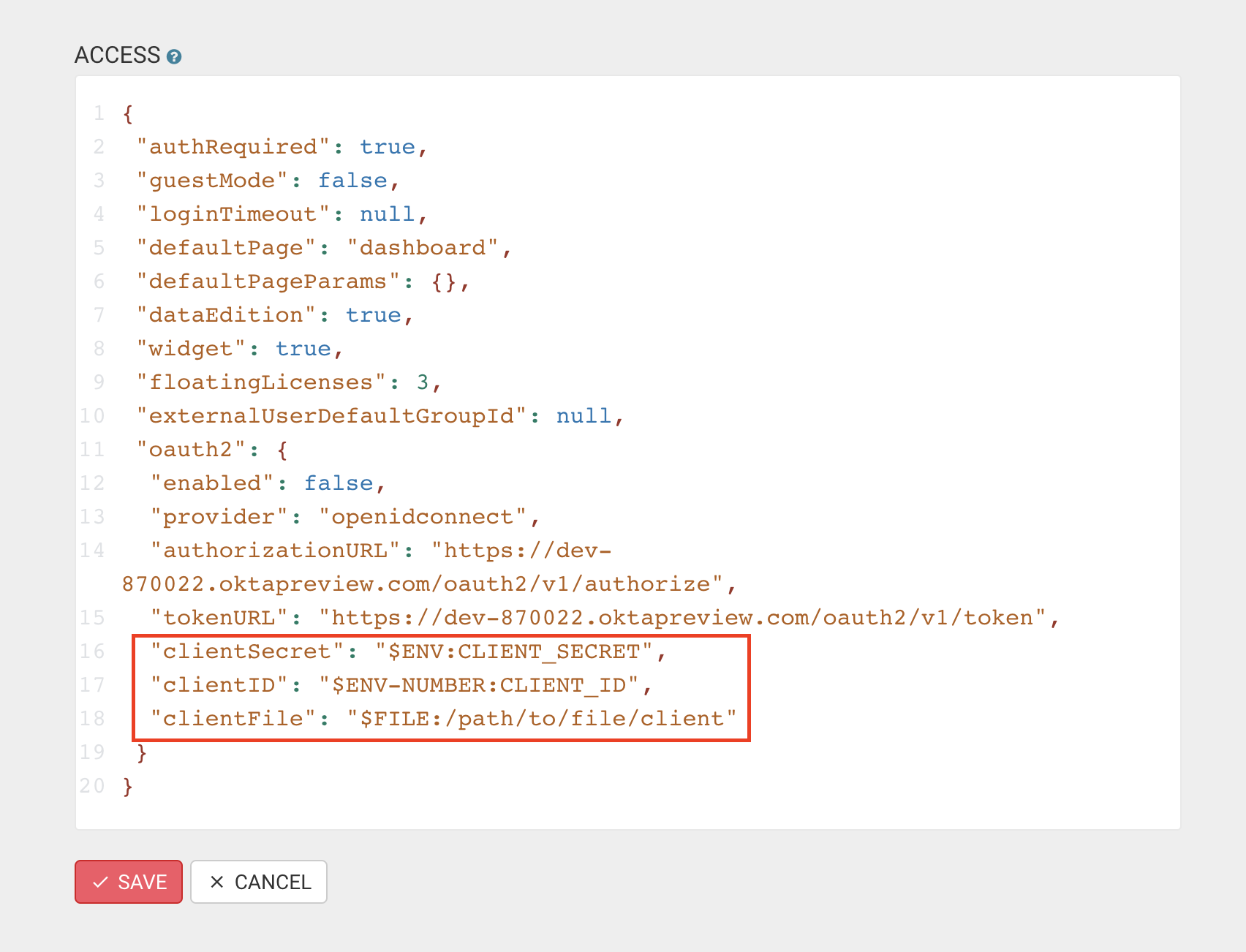

You can also pass variables to the configuration; they are expanded to the appropriate value from environment variables or files. For example, using "$ENV:NEO4J_PASSWORD" in the configuration will expand to the value of the environment variable "NEO4J_PASSWORD". Expandable variables are:

- "$ENV:VAR1": replaced with the value of the process' environment variable called "VAR1".
- "$ENV-NUMBER:VAR2": replaced with the value of the process' environment variable called "VAR2" parsed as a number.
- "$ENV-JSON:VAR3": replaced with the value of the process' environment variable called "VAR3" parsed as JSON.
- "$FILE:/path/to/file": replaced with the content of the file at "/path/to/file" parsed as a UTF-8 string.

In the configuration object, you can use the following syntax:

When you are finished changing, click Save.

Limitations: the "$ENV-JSON" expansion has the following limitations:

- ENV-JSON breaks if used at the root level or at the first level of the configuration (for example, for the whole "server.*" configuration key).
- ENV-JSON breaks if used at any level within the "dataSource.*" configuration key.
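To make the expansion rules for "$ENV:" and "$FILE:" concrete, here is an illustrative shell sketch of the behaviour described above. It is not the Linkurious Enterprise implementation: the function name is hypothetical, and the "$ENV-NUMBER" and "$ENV-JSON" forms are deliberately left out.

```shell
# expand_ref VALUE: mimic how a configuration value would be expanded.
#   "$ENV:NAME"  -> value of the environment variable NAME
#   "$FILE:PATH" -> content of the file at PATH
#   anything else is returned unchanged
expand_ref() {
  local ref="$1"
  case "$ref" in
    \$ENV:*)
      # printenv fails (non-zero status) when the variable is not set.
      printenv "${ref#\$ENV:}"
      ;;
    \$FILE:*)
      cat -- "${ref#\$FILE:}"
      ;;
    *)
      printf '%s\n' "$ref"
      ;;
  esac
}
```

In practice this means that the referenced variable has to be present in the environment of the Linkurious Enterprise process, for example by exporting NEO4J_PASSWORD in the shell or service definition that starts the server.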

Release Date: 2022-08-04

| Feature \ Vendor | Neo4j | Cosmos DB |
|---|---|---|
| Full-text search | ✅ | ✅ |
| Graph styles customization | ✅ | ✅ |
| Graph filtering | ✅ | ✅ |
| Graph editing | ✅ | ✅ |
| Access rights management | ✅ | ✅ |
| Custom graph queries | ✅ | ✅ |
| Custom query templates | ✅ | ✅ |
| Alerts | ✅ | ❌ |
| Shortest path analysis | ✅ | ❌ |

Linkurious Enterprise starts 3 separate processes when launched:

- node (or node.exe): The internal process manager
- node (or node.exe): The Linkurious Enterprise Server process
- java (or java.exe): The embedded Elasticsearch indexation server (if enabled).

Check if these processes are alive by opening the menu from the Linkurious Enterprise directory (see how to open it on each operating system below):

Run menu.sh. Alternately, run menu.sh status.

Run menu.bat. Alternately, run menu.bat status.

Run menu.sh.command. Alternately, run menu.sh.command status.

The status of the API can be retrieved using a browser or a command line HTTP client like cURL.

To retrieve the API status, send a GET request to http://127.0.0.1:3000/api/status

(replace 127.0.0.1 and 3000 with the actual host and port of your server).
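A scripted check of this endpoint could look like the following sketch. It assumes that cURL is installed and that a ready server reports the status name "initialized"; the function name is hypothetical.

```shell
# lke_is_ready [BASE_URL]: succeed when GET BASE_URL/api/status reports
# the status name "initialized".
lke_is_ready() {
  local base_url="${1:-http://127.0.0.1:3000}"
  # -f: treat HTTP errors as failures; -sS: quiet, but still print errors.
  curl -fsS --max-time 5 "$base_url/api/status" \
    | grep -Eq '"name"[[:space:]]*:[[:space:]]*"initialized"'
}

# Possible usage (not executed here): wait until the server is ready.
#   until lke_is_ready "http://127.0.0.1:3000"; do sleep 2; done
```

The check only matches text in the response body, so it does not need a JSON parser; a tool such as jq could be used instead for stricter parsing.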

    // example response
    {
      "status": {
        "code": 200,
        "name": "initialized",
        "message": "Linkurious ready to go :)",
        "uptime": 8633
      }
    }

To retrieve the API version, send a GET request to http://127.0.0.1:3000/api/version

(replace 127.0.0.1 and 3000 with the actual host and port of your server).

    // example response
    {
      "tag_name": "3.0.14",
      "name": "Brilliant Burrito",
      "prerelease": false,
      "enterprise": true
    }

The logs of the application are located in the linkurious/data/manager/logs folder:

- linkurious-server.log: Linkurious Enterprise server output
- embedded-elasticsearch.log: embedded Elasticsearch output

For a structured version of the Linkurious Enterprise server logs in JSONL format, see the linkurious/data/logs folder:

- linkurious.log: Linkurious Enterprise server general log, with timestamps
- linkurious.exceptions.log: Linkurious Enterprise server exceptions log, with timestamps

If you need to get support regarding an issue or you have a question, please contact us at support@linkurio.us.

If the issue is technical, send us the application logs as described below.

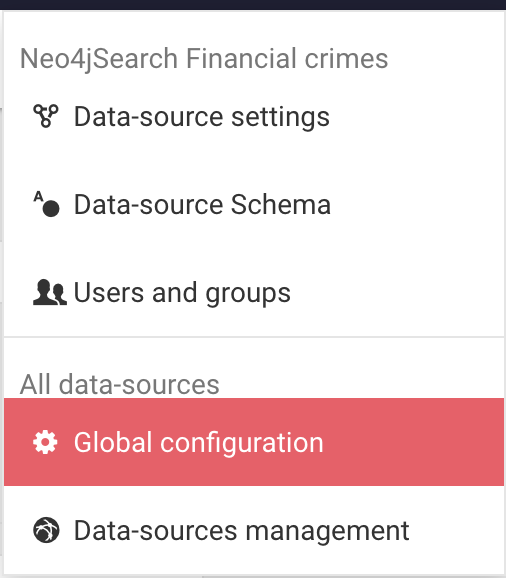

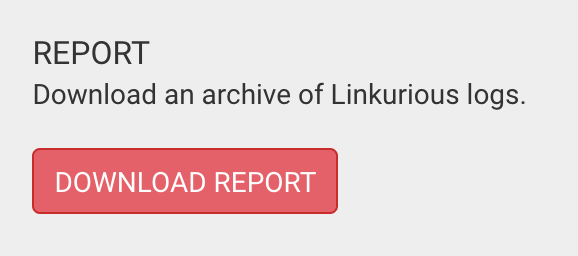

You can download an automatically generated report from the Web user interface via the Admin > Global configuration menu:

At the end of the page, click Download Report:

The system may fail to start due to an error. In that case, the automatically generated report cannot be downloaded, and the following files should be added manually to a compressed archive:

The application logs: the manager and log folders stored in <linkurious-installation>/data

The application configuration (optional): the production.json file stored in <linkurious-installation>/data/config

Depending on your situation, the configuration file (production.json) may sometimes contain clear-text passwords. Consider redacting such passwords before sharing your configuration with the support team.
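The manual collection and redaction steps above can be combined in a small script. This is a sketch under stated assumptions: the function names are hypothetical, the folder names manager and logs are taken from the paths used elsewhere in this guide, and the redaction only covers JSON string values whose key ends in "password" or "Password" (other secrets, such as passphrases, would need their own pattern).

```shell
# redact_secrets: read JSON text on stdin and replace the value of every
# key ending in "password"/"Password" with "REDACTED".
redact_secrets() {
  sed -E 's/("[A-Za-z_]*[Pp]assword"[[:space:]]*:[[:space:]]*)"[^"]*"/\1"REDACTED"/g'
}

# make_support_bundle INSTALL_DIR OUT_FILE
# Archive the log folders and a redacted copy of production.json.
make_support_bundle() {
  local install_dir="$1" out="$2" stage folder rc
  stage="$(mktemp -d)" || return 1
  for folder in manager logs; do
    [ -d "$install_dir/data/$folder" ] && cp -R "$install_dir/data/$folder" "$stage/"
  done
  if [ -f "$install_dir/data/config/production.json" ]; then
    redact_secrets < "$install_dir/data/config/production.json" > "$stage/production.json"
  fi
  tar -czf "$out" -C "$stage" .
  rc=$?
  rm -rf "$stage"
  return "$rc"
}
```

For example, `make_support_bundle /opt/linkurious /tmp/lke-support.tar.gz` (both paths are illustrations). It is still worth opening the redacted production.json before sending it, since the pattern does not handle values that contain escaped quotes.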

To install the license, a license.key file needs to be added to the /data folder of Linkurious Enterprise.

On Linux, Windows or Mac OS systems, the license is generated, downloaded and installed automatically with the ZIP file. No extra step is needed.

When installing with Docker the process has to be done manually. To achieve this:

- lke-data in our documentation example).
- license.key file.
- license.key file inside the previously created folder (lke-data in our example).
- /data folder in Linkurious Enterprise
- license.key file with a text editor

If your license with Linkurious has expired, please contact the sales team of Linkurious.

In case your license has already been renewed, you can update the license. To achieve this, either:

- license.key file from get.linkurio.us. Copy the license.key file to the /data folder of your current Linkurious Enterprise instance (deleting the existing license.key file).

Note: if using Docker, please follow the guidelines in the install the license section.

Linkurious Enterprise is scanned continuously for vulnerabilities both in the application code and in third-party dependencies using static code analysis tools and dependency scanning tools.

In addition, we regularly have Linkurious Enterprise tested for exploitable vulnerabilities by independent third-party security specialists (a.k.a. pentesting).

When a security vulnerability is discovered in Linkurious Enterprise:

Our vulnerability reports include a severity level. This severity level is based on our self-calculated CVSS score (Common Vulnerability Scoring System) for each specific vulnerability. CVSS is an industry standard vulnerability metric (learn more about CVSS).

For CVSS v3, we use the following severity rating system:

| CVSS v3 score range | Report severity level |
|---|---|
| 0.1 - 3.9 | Low |
| 4.0 - 6.9 | Medium |
| 7.0 - 8.9 | High |
| 9.0 - 10.0 | Critical |
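When triaging reports in a script, the mapping in the table above can be encoded as follows. This is a minimal sketch; the function name is hypothetical, and scores outside the 0.1 to 10.0 range of the table are rejected rather than classified.

```shell
# cvss_severity SCORE: print the report severity level for a CVSS v3 score.
cvss_severity() {
  awk -v s="$1" 'BEGIN {
    # Reject anything that is not a plain decimal number within the table range.
    if (s !~ /^[0-9]+(\.[0-9]+)?$/ || s + 0 < 0.1 || s + 0 > 10.0) {
      print "score outside 0.1 - 10.0: " s > "/dev/stderr"
      exit 1
    }
    if (s + 0 >= 9.0)      print "Critical"
    else if (s + 0 >= 7.0) print "High"
    else if (s + 0 >= 4.0) print "Medium"
    else                   print "Low"
  }'
}
```

For instance, `cvss_severity 7.5` prints High, and a call with a score of 0 returns a non-zero status.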

Below are a few examples of vulnerabilities which may result in a given severity level. Please keep in mind that this rating does not take into account details of your installation and is to be used as a guide only.

Critical severity vulnerabilities usually have most of the following characteristics:

For critical vulnerabilities, it is advised that customers patch or upgrade as soon as possible, unless you have other mitigating measures in place. For example, a mitigating factor could be if your installation is not accessible from the Internet.

High severity vulnerabilities usually have some of the following characteristics:

Medium severity vulnerabilities usually have some of the following characteristics:

Low severity vulnerabilities typically have very little impact on an organization's business. Exploitation of such vulnerabilities usually requires local or physical system access. Vulnerabilities in third party code that are unreachable from Linkurious Enterprise's code may be downgraded to low severity.

If you have discovered an unknown security vulnerability in Linkurious Enterprise (or in an associated product or service), please get in touch with us via email: security@linkurious.com

Before starting to explore the content of your graph database using Linkurious Enterprise, you need to import data in your graph database.

The following sections will help you import data into your graph:

If you already have data in your graph database or you don't need to import anything, see how to configure your graph database with Linkurious Enterprise.

Linkurious Enterprise relies on Neo4j to store data. To import data into Neo4j, you have a number of solutions depending on your needs.

If your data is static:

If you are streaming data, you can use Neo4j Streams Kafka Integration to ingest any kind of Kafka event into your graph.

For simple CSV files, to get you quickly up and running, you can use the official Linkurious CSV importer plugin to import data directly through Linkurious Enterprise. The plugin provides a simple user interface to upload CSV files and easily define relationships.

If you are using spreadsheets, you can easily transform the data within to Cypher queries with this tutorial.

Finally, if your data is in JSON format, you could use the load JSON functionality from APOC.

By default, a pre-loaded movie database is available in Neo4j Desktop.

You can also get started with Neo4j Sandbox and launch a free Neo4j online instance with an example dataset

If you are still not sure, or your data import needs are more complex, or if you need to get help from professionals, contact us and we will be happy to answer your questions.

Please refer to the Cosmos DB online documentation for details on how to load data into Cosmos DB.

We release a new version of Linkurious Enterprise every couple of months on average. New releases include new features, improvements, and bug fixes.

The following pages will help you check for updates, back-up Linkurious Enterprise before updating, and update Linkurious Enterprise.

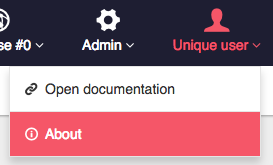

Using an administrator account, access the Your Username > About menu to open the Linkurious Enterprise info:

Click Check for updates to see if you are using the latest version.

Alternatively, you can check at http://linkurio.us/version/linkurious-enterprise.json

    // example response
    {
      "tag_name": "v3.0.14", // latest version of Linkurious Enterprise
      "message": null,
      "url": "https://get.linkurio.us/" // where to download the latest version from
    }

Follow these steps to perform a backup of your Linkurious Enterprise data:

- linkurious/data folder

Please note that this procedure does not back up your graph data, but only your Linkurious Enterprise configuration and user-data (visualizations, users, etc.).
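A backup of the data folder can be scripted along these lines. This is a sketch, not the official procedure: the function name and archive naming are hypothetical, it only covers the linkurious/data folder (an external MySQL, MariaDB or MSSQL user-data store needs its own backup), and stopping the server first to get a consistent copy of the SQLite file is an assumption rather than something stated here.

```shell
# backup_lke_data INSTALL_DIR DEST_DIR
# Create a timestamped archive of INSTALL_DIR/data in DEST_DIR and print its path.
backup_lke_data() {
  local install_dir="$1" dest_dir="$2" stamp archive
  [ -d "$install_dir/data" ] || { echo "no data folder in $install_dir" >&2; return 1; }
  stamp="$(date +%Y%m%d-%H%M%S)"
  archive="$dest_dir/linkurious-data-$stamp.tar.gz"
  mkdir -p "$dest_dir" \
    && tar -czf "$archive" -C "$install_dir" data \
    && echo "$archive"
}
```

For example, `backup_lke_data /opt/linkurious /var/backups/linkurious` (illustrative paths) prints the path of the archive it created.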

If you follow this procedure, you will be able to update Linkurious Enterprise to a newer version without losing your configuration and user-data store. Your data will be automatically migrated to the newer version.

Before updating, make sure that you have backed-up your data in case something unexpected happens.

During the update procedure, if Linkurious Enterprise is running, it will be stopped. You will need to re-start Linkurious Enterprise after the update procedure.

- linkurious-xxx-v3.0.14.zip into the root folder of your working Linkurious Enterprise directory (along the start, stop and update scripts)
- update.sh, OSX: update.sh.command, Windows: update.bat)

Even though the above procedure is the standard one for a general upgrade, some versions (especially major releases) can introduce changes requiring extra attention. Please browse our public resources to verify whether there is something applicable to your specific configuration.

If you use the Linkurious Enterprise docker image, the only step to update Linkurious Enterprise is to use the new docker image with the existing Linkurious Enterprise data volume.

If the update script fails, please check the update log located at linkurious/data/update.log for details.

A data-source is a conceptual representation of a graph database within Linkurious Enterprise. Visualizations and other user data created within Linkurious Enterprise will be associated with their respective data-source.

Every data-source is uniquely identified with a sourceKey, a string computed from the url and the store id of the database.

Modifying the url or regenerating a database will result in Linkurious Enterprise generating a new sourceKey, consequently creating a new data-source with new information.

If this behaviour is not desirable, consider using a stable sourceKey.
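To illustrate why a key derived from the url and the store id changes when either input changes, here is a purely hypothetical sketch. The hashing scheme (a SHA-256 digest truncated to 8 hexadecimal characters) is an assumption made for illustration only; the algorithm actually used by Linkurious Enterprise is not described here.

```python
import hashlib


def illustrative_source_key(url: str, store_id: str) -> str:
    """Hypothetical derivation, NOT the algorithm used by Linkurious Enterprise."""
    digest = hashlib.sha256(f"{url}|{store_id}".encode("utf-8")).hexdigest()
    return digest[:8]


# "store-A" and "store-B" are made-up store ids.
before = illustrative_source_key("http://127.0.0.1:7474/", "store-A")
after_url_change = illustrative_source_key("http://10.0.0.5:7474/", "store-A")
after_regeneration = illustrative_source_key("http://127.0.0.1:7474/", "store-B")

# Same inputs give the same key; a new url or a new store id gives a new key.
print(before, after_url_change, after_regeneration)
```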

By default, Linkurious Enterprise is configured to connect to a Neo4j database at 127.0.0.1:7474.

Linkurious Enterprise can connect to some of the most popular graph databases:

Linkurious Enterprise is able to connect to several graph databases at the same time and lets you switch from one database to another seamlessly.

You can configure your data-sources via the Web user interface or directly in the linkurious/data/config/production.json file.

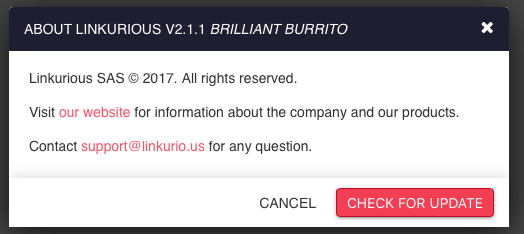

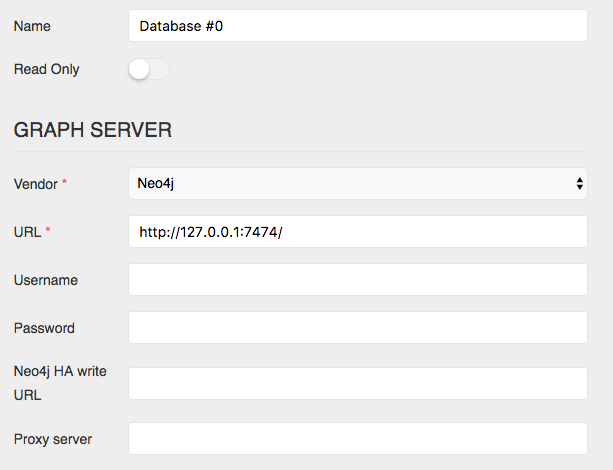

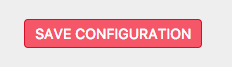

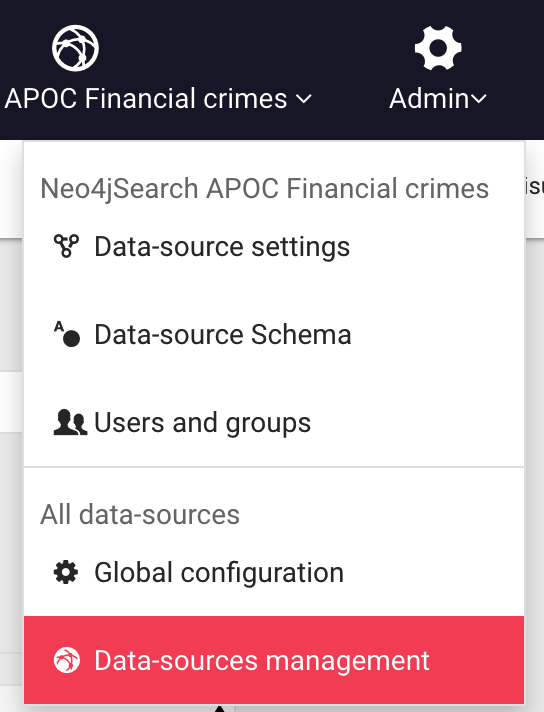

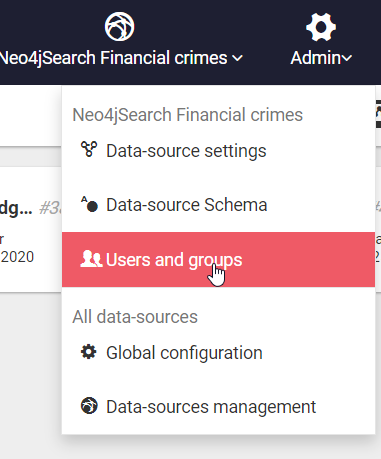

Using an administrator account, access the Admin > Data menu to edit the current data-source configuration:

Edit the data-source configuration to connect to your graph database:

Submit the changes by hitting the Save configuration button.

Edit the configuration file located at linkurious/data/config/production.json.

See details for each supported graph database vendor:

Neo4j is supported since version 3.2.0.

Note that Neo4j 4.0.0 and 4.0.1 are not supported. Neo4j 4 is supported since version 4.0.2.

To edit the Neo4j data-source configuration, you can either use the Web user-interface or edit the configuration file located at linkurious/data/config/production.json.

Example configuration:

    "dataSources": [
      {
        "graphdb": {
          "vendor": "neo4j",
          "url": "http://127.0.0.1:7474/",
          "user": "myNeo4jUser",
          "password": "myNeo4jPassword"
        },
        "index": {
          "vendor": "neo4jSearch"
        }
      }
    ]

Linkurious can connect to Neo4j via the Bolt protocol. To do so, you need to enable the protocol in your Neo4j configuration file. If Linkurious is connected over HTTP/S, it will try to automatically upgrade the connection to Bolt. The HTTP/S protocol is still required to perform a small subset of operations.

Supported graphdb options with Neo4j:

- url (required): URL of the Neo4j server (HTTP / HTTPS / bolt / neo4j)
- user (optional): Neo4j user (if credentials are enabled, see Neo4j credentials)
- password (optional): Neo4j password (if credentials are enabled)
- proxy (optional): URL of the HTTP proxy to use to connect to Neo4j (only used when url is HTTP/S)
- alternativeNodeId (optional): Name of the node property to use as reference in visualizations (see alternative IDs)
- alternativeEdgeId (optional): Name of the edge property to use as reference in visualizations
- latitudeProperty (optional): Name of the node property to use for latitude (used in geo mode)
- longitudeProperty (optional): Name of the node property to use for longitude (used in geo mode)
- allowSelfSigned (optional, default false): Whether to allow self-signed certificates
- databaseName (optional for v4.x.x): Name of the database to connect to
- allowVirtualEntities (optional, default true): Whether to allow virtual nodes and virtual edges
- alternativeURLs (optional for v4.x.x): A string array of alternative Neo4j Bolt URLs for high availability
- httpUrl (optional): Neo4j HTTP URL needed to connect with an "architect" user
- ignoredEdgeTypePrefixes (optional): List of edge type prefixes to be excluded from the schema sampling

For Neo4j v4 and later, Linkurious Enterprise provides full support for Neo4j connections over Bolt.

Linkurious Enterprise will use the Full Bolt connector when the url starts with any of the following prefixes: bolt, neo4j, neo4j+s

It is recommended to use the Full Bolt connector for Neo4j v4 and later.
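As a sketch of the prefix rule above, the following hypothetical helper reports whether a configured `url` would fall under the Full Bolt connector. It assumes the prefix is the URL scheme; `uses_full_bolt` is an illustrative name, not part of Linkurious Enterprise.

```python
from urllib.parse import urlparse

# Prefixes listed in this documentation for the Full Bolt connector.
FULL_BOLT_SCHEMES = {"bolt", "neo4j", "neo4j+s"}


def uses_full_bolt(url: str) -> bool:
    """Return True when the URL scheme is one of the Full Bolt prefixes."""
    return urlparse(url).scheme.lower() in FULL_BOLT_SCHEMES


print(uses_full_bolt("bolt://127.0.0.1:7687"))      # True
print(uses_full_bolt("neo4j+s://db.example.test"))  # True
print(uses_full_bolt("http://127.0.0.1:7474/"))     # False
```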

Linkurious Enterprise allows using Neo4j instances running on Neo4j Aura as data-sources.

Neo4j Aura is only supported for the Neo4j Aura instances running Neo4j engine v4.0 and later.

In order to have full-text search, you can choose among the following options:

If you just installed Neo4j, these steps will help you create credentials:

Alternatively, you can disable credentials in Neo4j by editing the Neo4j configuration at neo4j/conf/neo4j.conf and uncommenting the following line:

    dbms.security.auth_enabled=false

Note that Linkurious requires a Neo4j user with the Neo4j built-in admin or architect role. This is needed so that Linkurious can use Neo4j features like index creation and query cancellation.

If you are connecting via an architect role to Neo4j 3.5, it's necessary to explicitly specify both the bolt url and the http url via the url and httpUrl configuration keys respectively.

Configuring alternative ID indices is only possible from Neo4j 3.5.1 onwards, and it is recommended to do so.

The first step is to:

- myAlternativeNodeIdIndex
- ['Company', 'Person']
- myUniqueNodeId

Once we have this information, we can create the indices with the following Cypher queries:

call db.index.fulltext.createNodeIndex('myAlternativeNodeIdIndex', ['Company', 'Person', 'City'], ['myUniqueNodeId'], {analyzer: 'keyword'})

call db.index.fulltext.createRelationshipIndex('myAlternativeEdgeIdIndex', ['WORKS_FOR', 'LIVES_IN'], ['myUniqueEdgeId'], {analyzer: 'keyword'})

If new node labels or edge types are added to Neo4j, it's necessary to recreate these indices with the full list of categories.
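Because the indices have to be recreated with the complete list of categories each time, it can help to generate the Cypher call from a list. The sketch below only builds the query text in the same shape as the queries above; the function name is hypothetical, values are not escaped, and running the query against Neo4j is left out.

```python
def fulltext_index_query(kind, index_name, categories, properties):
    """Build the Cypher call that creates an alternative ID index.

    kind is "node" or "edge". Values are inserted verbatim, so they must
    not contain single quotes.
    """
    procedures = {"node": "createNodeIndex", "edge": "createRelationshipIndex"}

    def quoted_list(values):
        return "[" + ", ".join(f"'{value}'" for value in values) + "]"

    return (
        f"call db.index.fulltext.{procedures[kind]}('{index_name}', "
        f"{quoted_list(categories)}, {quoted_list(properties)}, "
        "{analyzer: 'keyword'})"
    )


node_query = fulltext_index_query(
    "node", "myAlternativeNodeIdIndex",
    ["Company", "Person", "City"], ["myUniqueNodeId"],
)
print(node_query)
```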

Once the indices are created, we can configure them in Linkurious:

Example configuration:

    "dataSources": [
      {
        "graphdb": {
          "vendor": "neo4j",
          "url": "http://127.0.0.1:7474/",
          "user": "myNeo4jUser",
          "password": "myNeo4jPassword",
          "alternativeNodeId": "myUniqueNodeId",
          "alternativeNodeIdIndex": "myAlternativeNodeIdIndex",
          "alternativeEdgeId": "myUniqueEdgeId",
          "alternativeEdgeIdIndex": "myAlternativeEdgeIdIndex"
        },
        "index": {
          "vendor": "neo4jSearch"
        }
      }
    ]

Cosmos DB is supported by Linkurious.

To edit the Cosmos DB data-source configuration, you can either use the Web user-interface or edit the configuration file located at linkurious/data/config/production.json.

Example configuration:

    "dataSources": [
      {
        "graphdb": {
          "vendor": "cosmosDb",
          "url": "https://your-service.gremlin.cosmosdb.azure.com:443/",
          ".NET SDK URI": "https://your-service.documents.azure.com:443/",
          "database": "your-graph-database",
          "collection": "your-collection",
          "primaryKey": "your-account-primary-key",
          "partitionKey": "your-collection-partition-key"
        },
        "index": {
          "vendor": "azureSearch",
          "url": "https://your-search-service.search.windows.net",
          "apiKey": "your-search-service-admin-api-key",
          "nodeIndexName": "your-node-index",
          "edgeIndexName": "your-edge-index"
        }
      }
    ]

Supported graphdb options for Cosmos DB:

- url (required): The full Gremlin Endpoint of your Cosmos DB. Not to be confused with the .NET SDK URI
- .NET SDK URI (required): The .NET SDK URI of your Cosmos DB
- database (required): Cosmos DB database
- collection (required): Cosmos DB collection
- primaryKey (required): Cosmos DB account primary key
- partitionKey (required): The partition key of your Cosmos DB collection
- alternativeNodeId (optional): Name of the node property to use as reference in visualizations (see alternative IDs)
- alternativeEdgeId (optional): Name of the edge property to use as reference in visualizations
- latitudeProperty (optional): Name of the node property to use for latitude (used in geo mode)
- longitudeProperty (optional): Name of the node property to use for longitude (used in geo mode)
- allowSelfSigned (optional, default false): Whether to allow self-signed certificates

In order to have full-text search, you can choose among the following options:

When you save a visualization in Linkurious Enterprise, only the node and edge identifiers are persisted in the user-data store, along with position and style information. When a visualization is loaded, the node and edge identifiers are used to reload the actual node and edge data from the graph database.

If you need to re-generate your graph database from scratch, the graph database will probably generate new identifiers for all nodes and edges, breaking all references to nodes and edges in existing visualizations.

You can configure Linkurious Enterprise to use a node or edge property as stable identifiers. Once set-up, Linkurious Enterprise will use the given property as identifier instead of using the identifiers generated by the database.

Thanks to this strategy, visualizations will be robust to graph re-generation.

Notice that the properties used as identifier should be indexed by the database to allow for a fast lookup by value.

To use alternative node and edge identifiers, edit your data-source database configuration in the configuration file (linkurious/data/config/production.json):

Example of alternative identifier configuration with Neo4j:

    "dataSources": [
      {
        "graphdb": {
          "vendor": "neo4j",
          "url": "http://127.0.0.1:7474/",
          "alternativeNodeId": "STABLE_NODE_PROPERTY_NAME",
          "alternativeEdgeId": "STABLE_EDGE_PROPERTY_NAME"
        }
        // [...]
      }
    ]

Configuring alternative ID indices is only possible with Neo4j 3.5.1 and above. If you plan on using a compatible Neo4j version and alternative IDs, we recommend configuring alternative ID indices.

To achieve better performance with alternative IDs, it is recommended to configure indices for alternative node and edge IDs.

Refer to the documentation specific to Neo4j on how to configure these indices.

Linkurious Enterprise generates a unique identifier for each data-source, based on internal information from the data-source.

This data-source identifier (called the sourceKey) is used to identify all user-data (visualizations etc.) that belongs to a data-source.

When re-generating a Neo4j graph database, for example, the sourceKey will change.

In order to avoid breaking all references to existing visualizations, it is possible to manually set the sourceKey of a data-source.

Before re-generating the graph database, go to the Admin > Sources menu:

In the sources administration panel, find the Key value for your data-source (e.g. 1c3490bd) and copy it.

Then, edit the configuration file (linkurious/data/config/production.json) and set the key manualSourceKey for your data-source:

    "dataSources": [
      {
        "manualSourceKey": "1c3490bd",
        "graphdb": {
          "vendor": "neo4j",
          "url": "http://127.0.0.1:7474/"
        }
        // [...]
      }
    ]

We recommend using a manual sourceKey if you need to re-generate a database with existing user data (visualizations etc.), or when the hostname and port number of your data-source are likely to change after the first connection to Linkurious Enterprise.

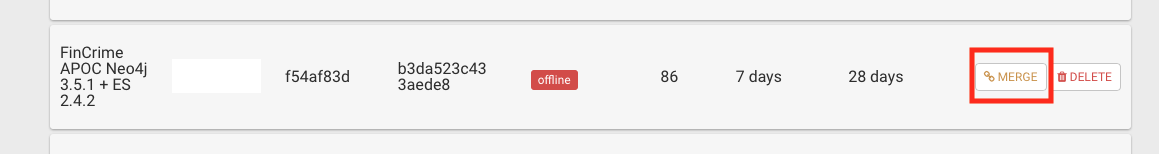

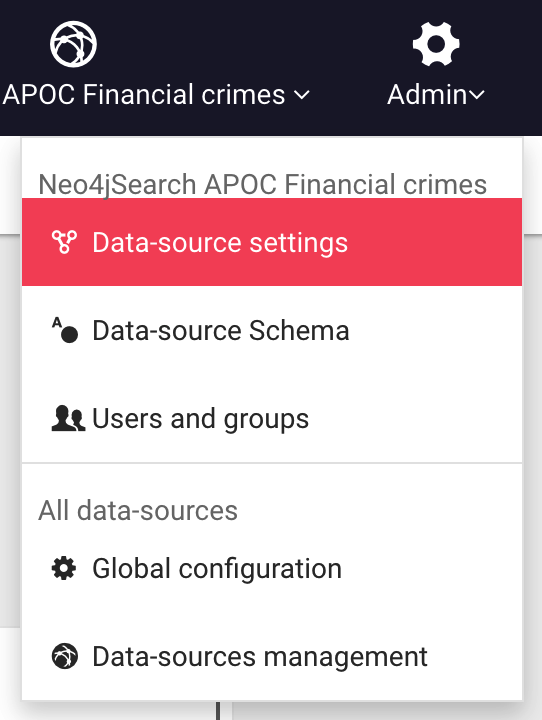
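Setting manualSourceKey in the configuration file, as described earlier, can also be scripted. The sketch below is a hedged example: it assumes production.json is strict JSON (no comments) and that dataSources is an array, and the function name is hypothetical. The demonstration writes to a temporary file rather than to a real installation.

```python
import json
import tempfile
from pathlib import Path


def set_manual_source_key(config_path, source_index, source_key):
    """Set manualSourceKey on one data-source and write the file back."""
    path = Path(config_path)
    config = json.loads(path.read_text(encoding="utf-8"))
    config["dataSources"][source_index]["manualSourceKey"] = source_key
    path.write_text(json.dumps(config, indent=2), encoding="utf-8")
    return config


# Demonstration on a throwaway copy shaped like the documented example.
with tempfile.TemporaryDirectory() as tmp:
    demo_path = Path(tmp) / "production.json"
    demo = {"dataSources": [{"graphdb": {"vendor": "neo4j", "url": "http://127.0.0.1:7474/"}}]}
    demo_path.write_text(json.dumps(demo), encoding="utf-8")
    updated = set_manual_source_key(demo_path, 0, "1c3490bd")
    reloaded = json.loads(demo_path.read_text(encoding="utf-8"))

print(updated["dataSources"][0]["manualSourceKey"])  # 1c3490bd
```

Back up the configuration file before running anything like this against a real installation.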

Alternatively, you have the possibility to merge the data-sources directly from the data-source management page with the following steps:

From the dashboard, go to the Admin > Data-sources management menu

The data-source to be merged should be the old data-source now marked as offline because it has been replaced with the freshly generated data-source.

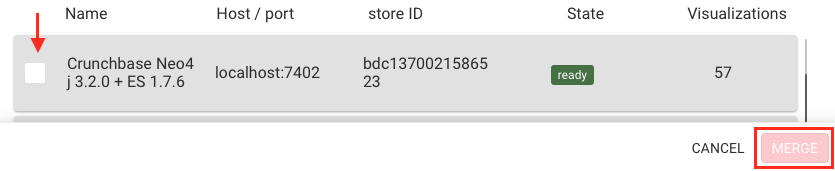

On the resulting modal, select the new data-source then click on the merge button.

The user can choose to perform a normal merge, or an overwrite merge.

As a result, the following objects from the old data-source will be merged in the new data-source:

The Data-Source won't be deleted in case the user decides to do an overwrite merge at a later stage.

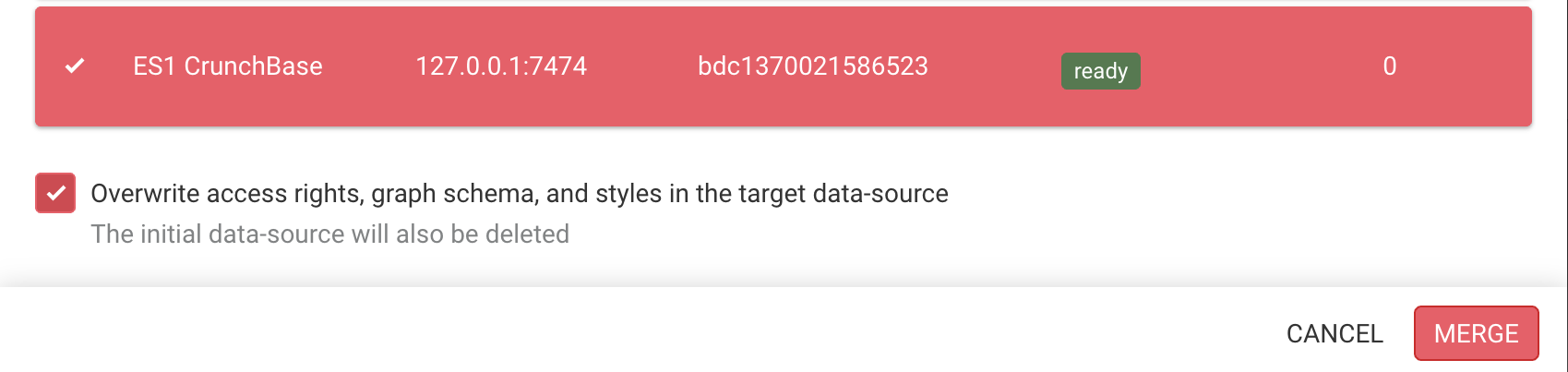

To perform an overwrite merge simply select the overwrite check box in the merge modal.

As a result, the objects from the old data-source mentioned above will be merged in the new data-source. Additionally, the following objects will be replaced in the new data-source with the ones from the old data-source:

This action will irreversibly remove any data associated with the old data-source, and the data-source will be deleted.

The following advanced data-source settings apply to all data-sources.

To change them, see how to configure Linkurious Enterprise.

dataSourceConnectionTimeout (default: 30): The maximum time in seconds before the connection attempt to each data-source times out.

dataSourceAutoReconnectInterval (default: 300): Number of seconds after which Linkurious will try to reconnect automatically to all offline data-sources.

This value is internally converted to minutes; if it is not a multiple of 60, it will be rounded to the nearest number of minutes.

Explicitly setting this parameter to 0 disables the automatic reconnection of offline data-sources.

pollInterval (default: 10): Check if the data-sources and search engine are connected at each interval (in seconds).

defaultTimezone (default: Z): The timezone in which to represent date and datetime values

timeline (default: false): Whether the visualization timeline is available in the workspace UI

layoutWorkers (default: 2): Number of processes dedicated to computing server-side the layout of visualizations (Currently only available via Linkurious APIs)

indexationChunkSize (default: 5000): The number of nodes and edges retrieved at each batch during the indexation of the graph database.

searchAddAllThreshold (default: 500): The maximum number of search results that the user can add to a Visualization at once.

minSearchQueryLength (default: 3): The number of characters needed to trigger a search query. Set 1 to provide live results from the first character typed by the user.

searchPrefixExpansion (default: true): Whether Linkurious should return nodes and edges that contain the search query as a prefix, e.g.: Searching Link will return node Linkurious.

supernodeThreshold (default: 10000): Number of adjacent edges after which a node is considered a supernode.

edgesBetweenSupernodes (default: false): Whether Linkurious should retrieve edges between 2 supernodes. Note: Linkurious always returns edges between a supernode and a regular node.

rawQueryTimeout (default: 60000): Milliseconds after which a query to the database will time out.

defaultFuzziness (default: 0.1): Default value to search fuzziness between 0 and 1. A value of 0 means exact matching of the search query.

expandThreshold (default: 50): When the user expands a node with too many neighbors, Linkurious Enterprise will ask to refine the query so that fewer neighbors are returned.

rawQueryLimit (default: 500): The maximum number of results returned by Linkurious Enterprise when executing a query or query template.

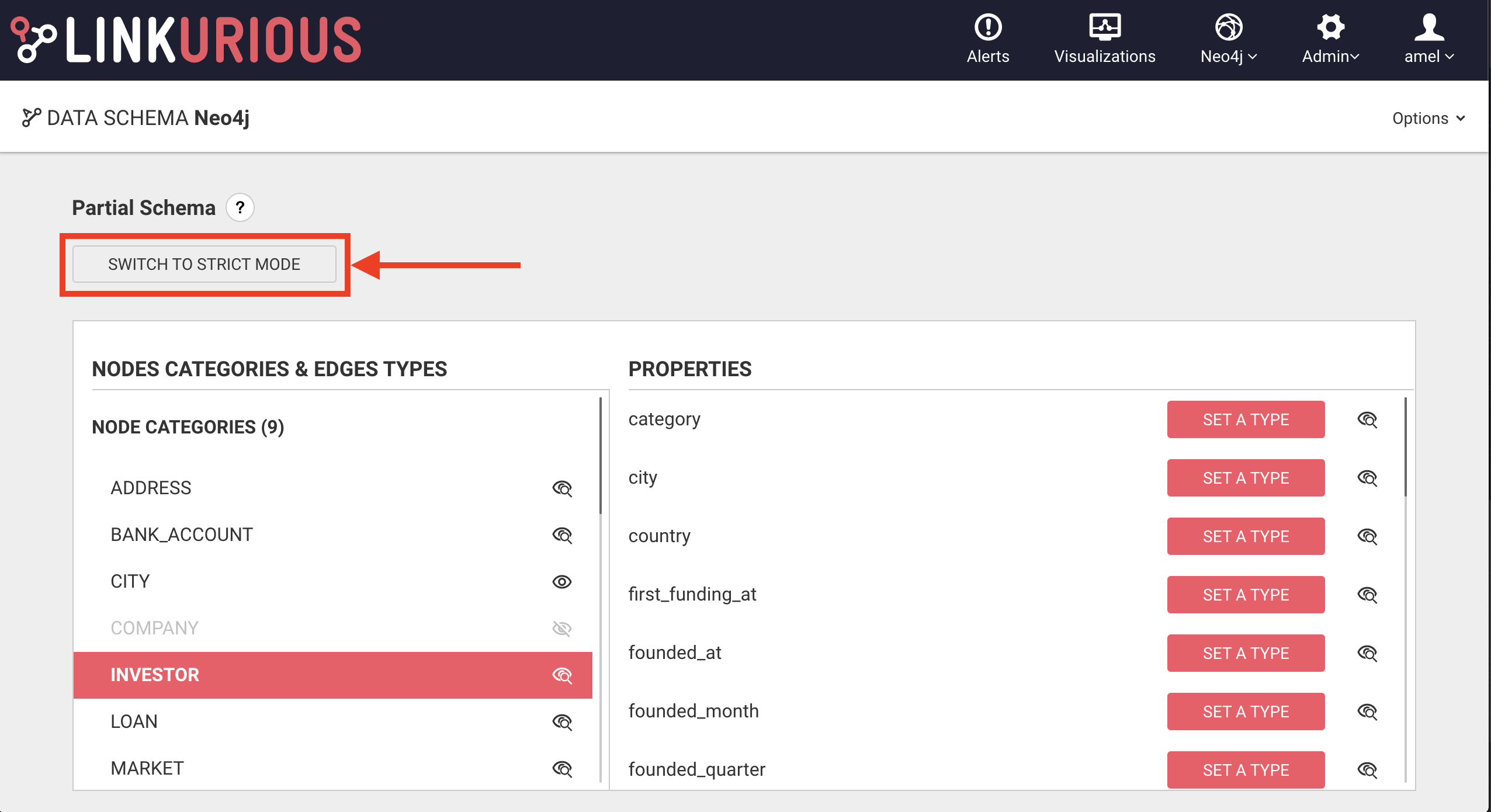

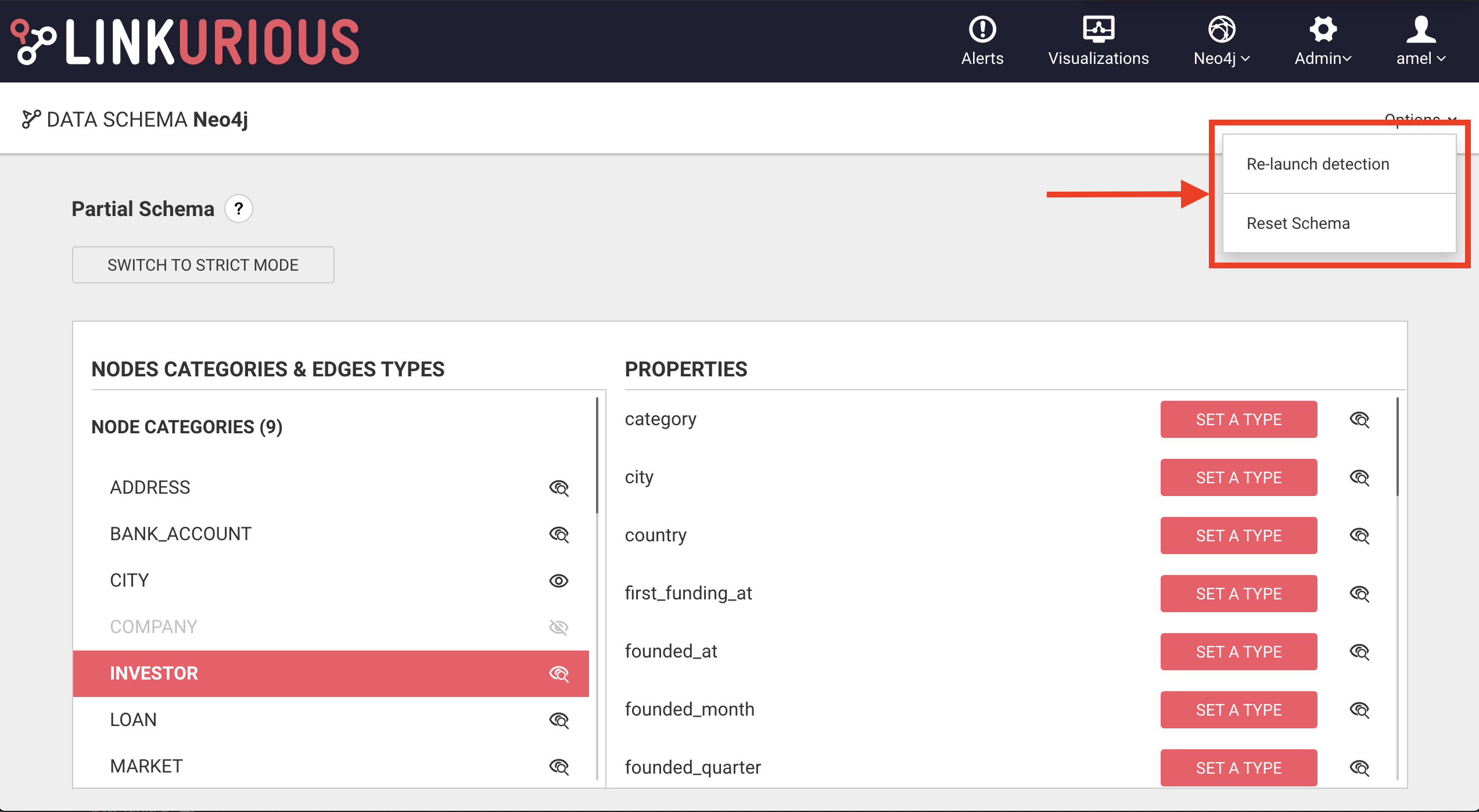

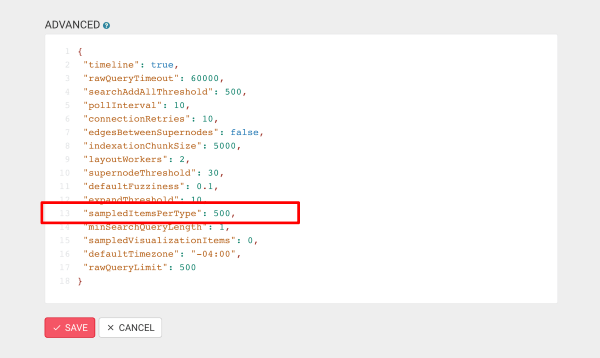

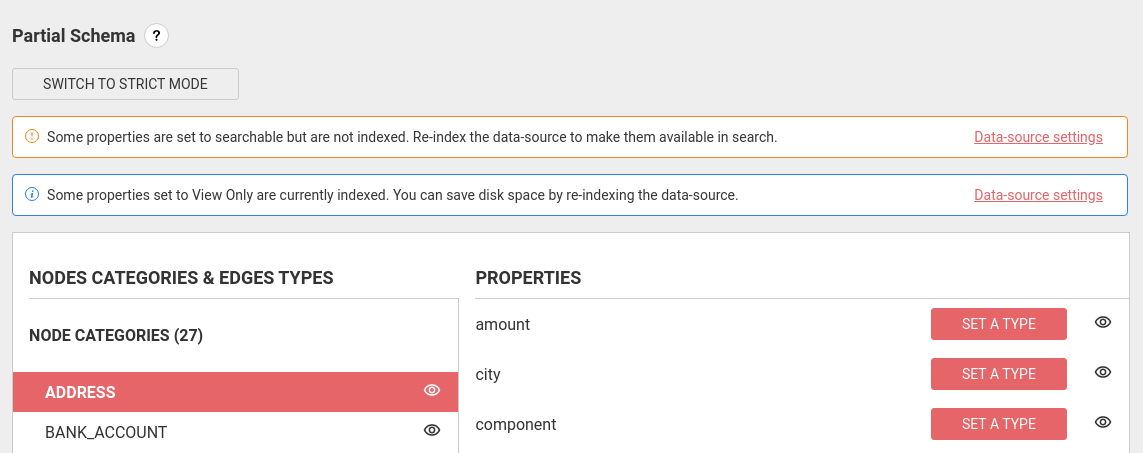

sampledItemsPerType (default: 1000): The number of nodes/edges per category/type to read from the graph for the schema sampling

sampledVisualizationItems (default: 1000): Number of nodes/edges to read from existing visualizations for the schema sampling

showBuiltinQueries (default: true): Whether built-in queries like Shortest Path should appear in the list of Graph Query templates.

slowQueryThreshold (default: 500): Milliseconds after which a query is logged in the log file as a slow query.

defaultLayout (default: {algorithm: 'force', mode: 'best'}): The default layout to be applied to visualizations.

defaultLayout.algorithm (default: force): Can be force or hierarchical.

defaultLayout.mode (default: best): (best | fast) for the force algorithm and (LR | RL | TB | BT) for the hierarchical algorithm. See the layout section in the user manual for more information.

searchRetryMultiplier (default: 5): During search, update the index by removing the missing items from the index server. The higher the multiplier, the lower the chance that search returns non-existing items, but search might take more time.

extraCertificateAuthorities: The relative or absolute path to a PEM file. When set, the well-known "root" CAs (like LetsEncrypt) will be extended with the extra certificates in the file. The file should consist of one or more trusted certificates in the PEM format.

If the path is relative, the file should be located within the linkurious/data folder.

If Linkurious Enterprise is installed as a service, the service needs to be uninstalled and re-installed for the change to be taken into account.

obfuscation (default: false): Set to true if you want all the passwords in the configuration to be obfuscated at the next restart of Linkurious Enterprise.

Linkurious Enterprise allows you to search your graph using natural full-text search.

In order to offer the search feature out-of-the-box, Linkurious Enterprise ships with an embedded Elasticsearch server. This option allows for zero-configuration deployment in many cases.

By default, Linkurious Enterprise uses Elasticsearch for search. This option requires Linkurious Enterprise to index your graph database, which technically means that Linkurious Enterprise will feed the whole content of the graph database to Elasticsearch to make it searchable. The time required to index the graph database increases with the size of the graph, and this solution has limits in its scalability.

Indexation typically happens at speeds between 2000 and 20000 nodes or edges per second, depending on the number of properties for nodes and edges, and on hardware performance.
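As a back-of-the-envelope illustration of that range, the sketch below turns an item count into a best-case and worst-case duration. The 10 million figure is an arbitrary example, and the estimate assumes throughput stays constant for the whole run, which real deployments may not achieve.

```python
def indexation_time_range(item_count, slow_rate=2000, fast_rate=20000):
    """Return (best_case_seconds, worst_case_seconds) for a full indexation.

    Rates are in nodes or edges per second, taken from the typical range
    quoted in this documentation.
    """
    return item_count / fast_rate, item_count / slow_rate


best, worst = indexation_time_range(10_000_000)
print(f"{best / 60:.0f} to {worst / 60:.0f} minutes")  # 8 to 83 minutes
```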

By default, Linkurious Enterprise ships with an embedded Elasticsearch server (version 7.10.2). This server only listens for local connections on a non-default port (it binds to 127.0.0.1:9201), for security reasons and to avoid collisions with existing servers.

It is possible to use your own Elasticsearch cluster for performance reasons. Linkurious Enterprise supports Elasticsearch v1.x and v2.x. See details about Elasticsearch configuration options.

Using Elasticsearch is convenient but may not fit cases where the graph database is big (more than a couple million nodes and edges) and is regularly modified from outside Linkurious Enterprise, which requires re-indexing the whole database.

In order to offer a scalable search feature on big graphs, Linkurious Enterprise offers alternative search solutions. See details about the different options.

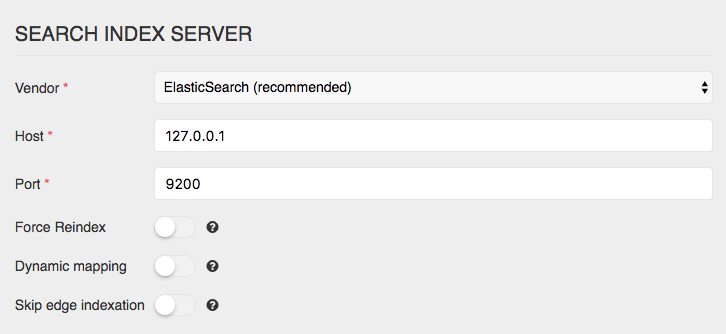

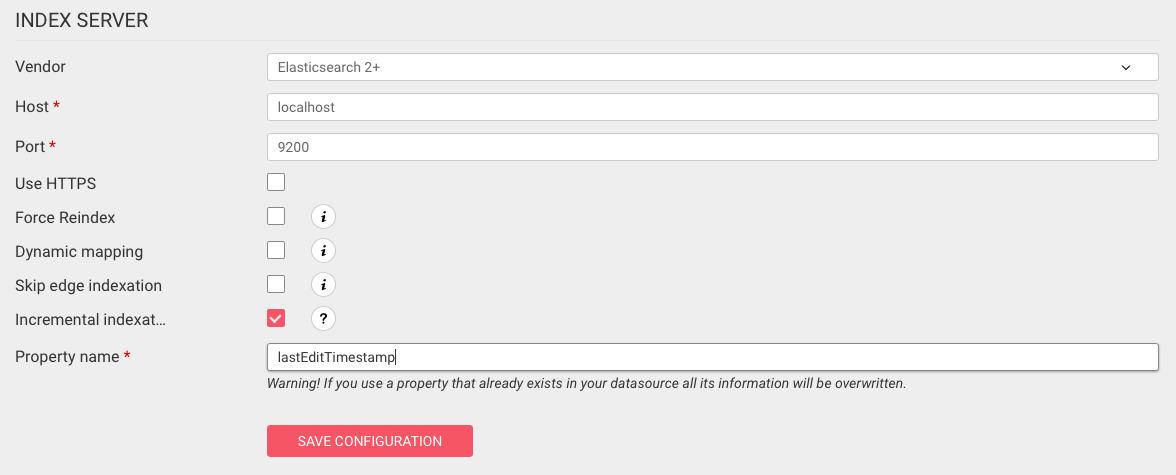

You can configure your search engines via the Web user interface or directly in the linkurious/data/config/production.json file.

Using an administrator account, access the Admin > Data menu to edit the current data-source configuration:

Edit the search engine configuration to connect to your graph database:

Submit the changes by hitting the Save configuration button.

Edit the configuration file located at linkurious/data/config/production.json.

See details for each supported search connector.

| Feature | Description |
|---|---|
| Onboarding | Does this search option require additional configuration, or can it be used out-of-the-box with the associated graph database? |
| Fast indexation | How fast is indexation? Note that this is a relative metric; while some search options may be faster than others, speed will depend on the complexity of your data model and your hardware limitations. |
| Automatic index sync | Are changes made to the graph DB propagated to the index automatically? |
| Search scalability | How well do search queries perform for large graph databases? The actual upper limit on the performance of a given option will vary from vendor to vendor. |
| Advanced search | Are advanced search features available, such as numerical and date range search operators? |

| | Onboarding | Fast indexation | Automatic index sync | Search scalability | Advanced search |
|---|---|---|---|---|---|
| Embedded Elasticsearch | Plug-and-play | No | No | Won't scale beyond ~100M nodes | Yes (requires configuration) |
| External Elasticsearch (v2+) | Requires Elasticsearch installation and configuration | No | No | Yes (by adding hardware to Elasticsearch cluster) | Yes (requires configuration) |
| Neo4j-to-Elasticsearch | Requires Elasticsearch installation and configuration + Neo4j plugin installation and configuration | Yes (up to 10,000 nodes/second) | Yes | Yes (by adding hardware to Elasticsearch cluster) | Yes |
| Neo4j Search (v3.5.1+) | Requires configuration in Neo4j (properties to index must be specified) | Yes | Yes | Limited | No |
| Elasticsearch Incremental Indexing | Requires External Elasticsearch (versions compatible with Linkurious Enterprise) and Neo4j v3.5.1 and above | Yes (except the first full indexation) | Yes (requires configuration) | Yes (by adding hardware to Elasticsearch cluster) | Yes (requires configuration) |

| | Onboarding | Fast indexation | Automatic index sync | Search scalability | Advanced search |
|---|---|---|---|---|---|
| AzureSearch | Requires AzureSearch setup (easy) | Yes | Yes | Yes | No |
| Embedded Elasticsearch | Plug-and-play | No | No | Won't scale beyond ~1M nodes | Yes (requires configuration) |
| External Elasticsearch (v2+) | Requires Elasticsearch installation and configuration | No | No | Won't scale beyond ~1M nodes | Yes (requires configuration) |

The neo4jSearch connector is a solution for full-text search with Neo4j.

neo4jSearch is supported since version 3.5.1 of Neo4j.

Linkurious can use the built-in search indices managed by Neo4j itself.

You can either use the Web user-interface or edit the configuration file located at linkurious/data/config/production.json to set the index.vendor property to the value neo4jSearch.

To edit the Neo4j data-source configuration, you can either use the Web user-interface or edit the configuration file located at linkurious/data/config/production.json.

Example configuration:

    "dataSources": [
      {
        "graphdb": {
          "vendor": "neo4j",
          "url": "http://127.0.0.1:7474/",
          "user": "myNeo4jUser",
          "password": "myNeo4jPassword"
        },
        "index": {
          "vendor": "neo4jSearch",
          "indexEdges": true
        }
      }
    ]

Supported index options with Neo4jSearch:

- indexEdges (optional): Whether to create or use an edge index

Note that, in Neo4jSearch, only fields stored in Neo4j as strings will be searchable. Numerical and date properties won't be searchable if stored in Neo4j as numbers or native dates.

Neo4j-to-elasticsearch is a Neo4j plugin that enables automatic synchronization between Neo4j and Elasticsearch. This means that all changes to Neo4j are automatically propagated to Elasticsearch.

Neo4j-to-elasticsearch plugin is not compatible with Neo4j v4.x.

Follow these steps to install the plugin:

A.B.C.x where A.B.C matches your Neo4j version and x is 44 or later

A.B.C.x.y where A.B.C matches your Neo4j version and x.y is 44.8 or later

graphaware-server-community-all-A.B.C.x.jar and graphaware-neo4j-to-elasticsearch-A.B.C.x.y.jar to your neo4j/plugins directory

neo4j/conf/neo4j.conf):

    com.graphaware.runtime.enabled=true
    com.graphaware.module.ES.1=com.graphaware.module.es.ElasticSearchModuleBootstrapper
    com.graphaware.module.ES.uri=HOST_OF_YOUR_ELASTICSEARCH_SERVER
    com.graphaware.module.ES.port=PORT_OF_YOUR_ELASTICSEARCH_SERVER
    com.graphaware.module.ES.mapping=AdvancedMapping
    com.graphaware.module.ES.keyProperty=
    com.graphaware.module.ES.retryOnError=true
    com.graphaware.module.ES.asyncIndexation=true
    com.graphaware.module.ES.initializeUntil=2000000000000
    # Set "relationship" to "(false)" to disable relationship (edge) indexation.
    # Disabling relationship indexation is recommended if you have a lot of relationships and don't need to search them.
    com.graphaware.module.ES.relationship=
    com.graphaware.runtime.stats.disabled=true
    com.graphaware.server.stats.disabled=true

neo4j/conf/neo4j.conf:

    com.graphaware.module.ES.initializeUntil=2000000000000

Please note that indexation could fail if your data uses different data types for the same property key. For example, if a property representing a date uses ISO strings in some nodes and timestamps in others. If you encounter this issue, please get in touch.

initializeUntil

The initializeUntil specification is used to trigger the indexation of existing Neo4j data in Elasticsearch. com.graphaware.module.ES.initializeUntil must be set to a number slightly higher than what a Java call to System.currentTimeMillis() would normally return when the module is started. Thus, the database will be (re-)indexed only once, and not with every subsequent restart.

In other words, re-indexing will happen if System.currentTimeMillis() < com.graphaware.module.ES.initializeUntil.
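The condition above can be sketched in a few lines. The helper below proposes a value a few minutes ahead of the current time in milliseconds since the Unix epoch (the same unit Java's current-time call returns); the 5-minute margin and both function names are assumptions made for illustration.

```python
import time


def suggest_initialize_until(margin_seconds=300, now_ms=None):
    """Return a timestamp (ms since epoch) slightly later than now."""
    if now_ms is None:
        now_ms = int(time.time() * 1000)
    return now_ms + margin_seconds * 1000


def will_reindex(initialize_until, now_ms):
    """Re-indexing happens while the current time is below initializeUntil."""
    return now_ms < initialize_until


value = suggest_initialize_until(now_ms=1_600_000_000_000)
print(f"com.graphaware.module.ES.initializeUntil={value}")
print(will_reindex(value, 1_600_000_000_000))  # True: module started before the deadline
print(will_reindex(value, 1_600_000_400_000))  # False: a later restart does not re-index
```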

Once the neo4j-to-elasticsearch plugin is installed, you need to change the relevant data-source configuration to use neo2es as its search index vendor.

You can either use the Web user-interface or edit the configuration file located at linkurious/data/config/production.json to set the index.vendor property to the value neo2es.

For smaller indexation tasks, neo4j-to-elasticsearch can be used straight out of the box. Larger indexes are trickier. If you find that indexation is failing to complete, or that search is unusually slow, you will need to settle for partial indexation in order to keep Elasticsearch usable.

Partial indexation can be configured in your Neo4j configuration file (neo4j/conf/neo4j.conf) by specifying a subset of your graph to index. You can do this by selecting which types of nodes and relationships to keep and which properties to index on these nodes.

(For a detailed list of configuration options, please consult Neo4j-to-elasticsearch's official documentation, available at Graphaware's Github repo. This guide will focus on a few use cases that should be adaptable to a wide variety of graph models.)

Partial indexation is handled by four options which can be added to your Neo4j configuration file:

    com.graphaware.module.ES.node=...
    com.graphaware.module.ES.node.property=...
    com.graphaware.module.ES.relationship=...
    com.graphaware.module.ES.relationship.property=...

com.graphaware.module.ES.node and com.graphaware.module.ES.relationship control which nodes and relationships to index. com.graphaware.module.ES.node.property and com.graphaware.module.ES.relationship.property control which properties of these nodes and relationships to index.

Each of these lines is followed by one or more parameters. Parameters are boolean expressions. They can be chained together using standard logical operators && (AND) and || (OR). The most basic parameters are (true) and (false). They will tell Elasticsearch either to index everything (the default behavior) or to index nothing. Note that the parentheses are required in order to force Elasticsearch to ignore these nodes while loading your database into the index.

To reiterate, if we add the line

    com.graphaware.module.ES.relationship=(false)

to our configuration file, Elasticsearch will not index any of the relationships in our database.

The next step up is parameter functions. These allow for more complex inclusion and exclusion rules. For both nodes and relationships, the following two functions are available:

- getProperty('propName', 'defaultValue'): Returns a property value, allowing you to compare it to another value using standard comparison operators.
- hasProperty('propName'): Returns true or false depending on whether a node or relationship contains a certain property.

Additionally, there are functions specific to nodes and to relationships. For nodes, those that are useful for partial indexation are:

- getDegree(): Returns the degree (number of relationships) of a node. Can be used with comparison operators.
- hasLabel('labelName'): Returns true or false depending on whether the specified label is present on a node.

And for relationships, they are:

- isType('typeName'): Returns true or false depending on whether the specified type is present on a relationship.
- isOutgoing(): Returns true or false depending on whether a relationship is outgoing.
- isIncoming(): Returns true or false depending on whether a relationship is incoming.

(You can find a full list of functions in Graphaware's inclusion policies maintained on their Github repo.)

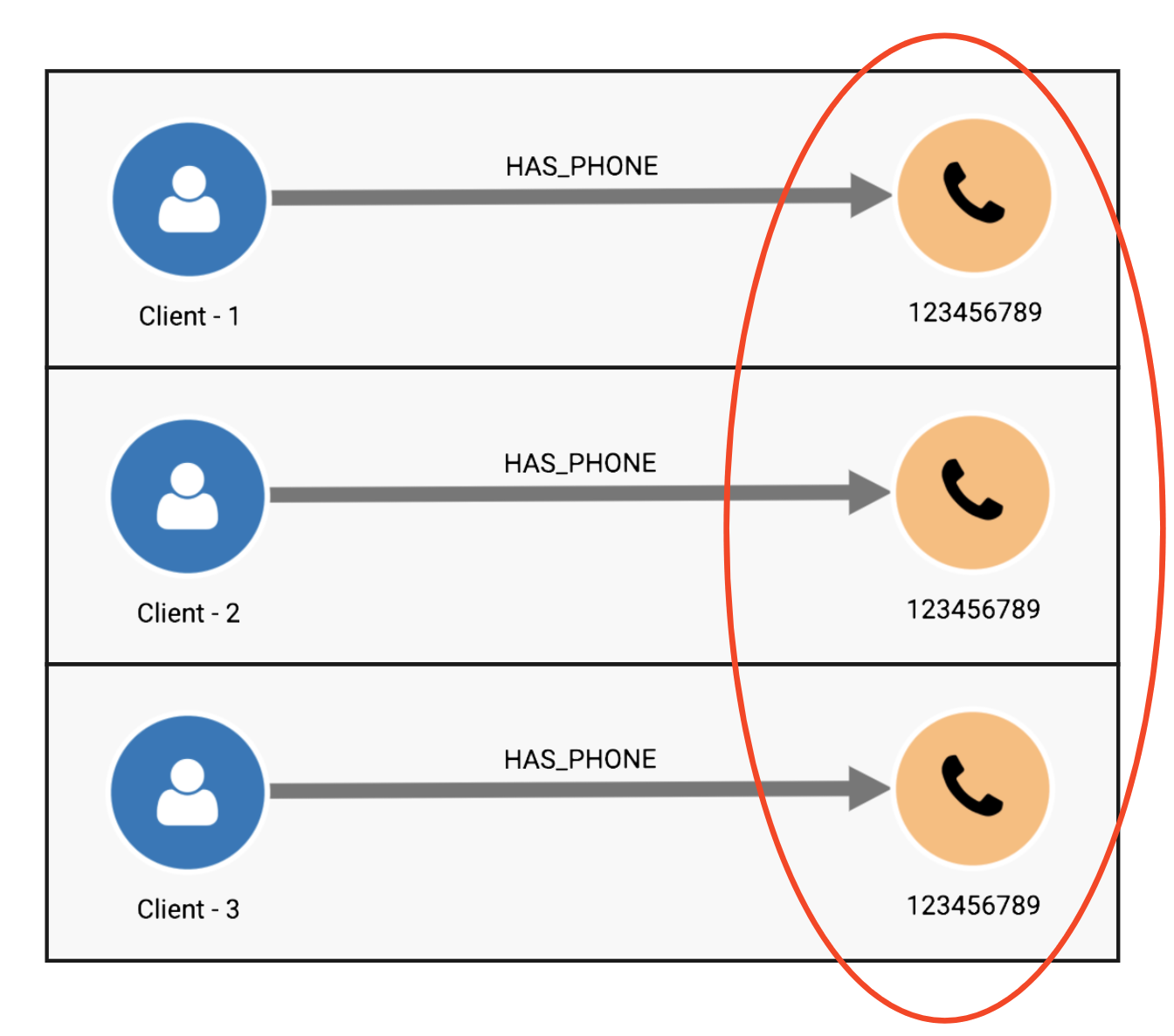

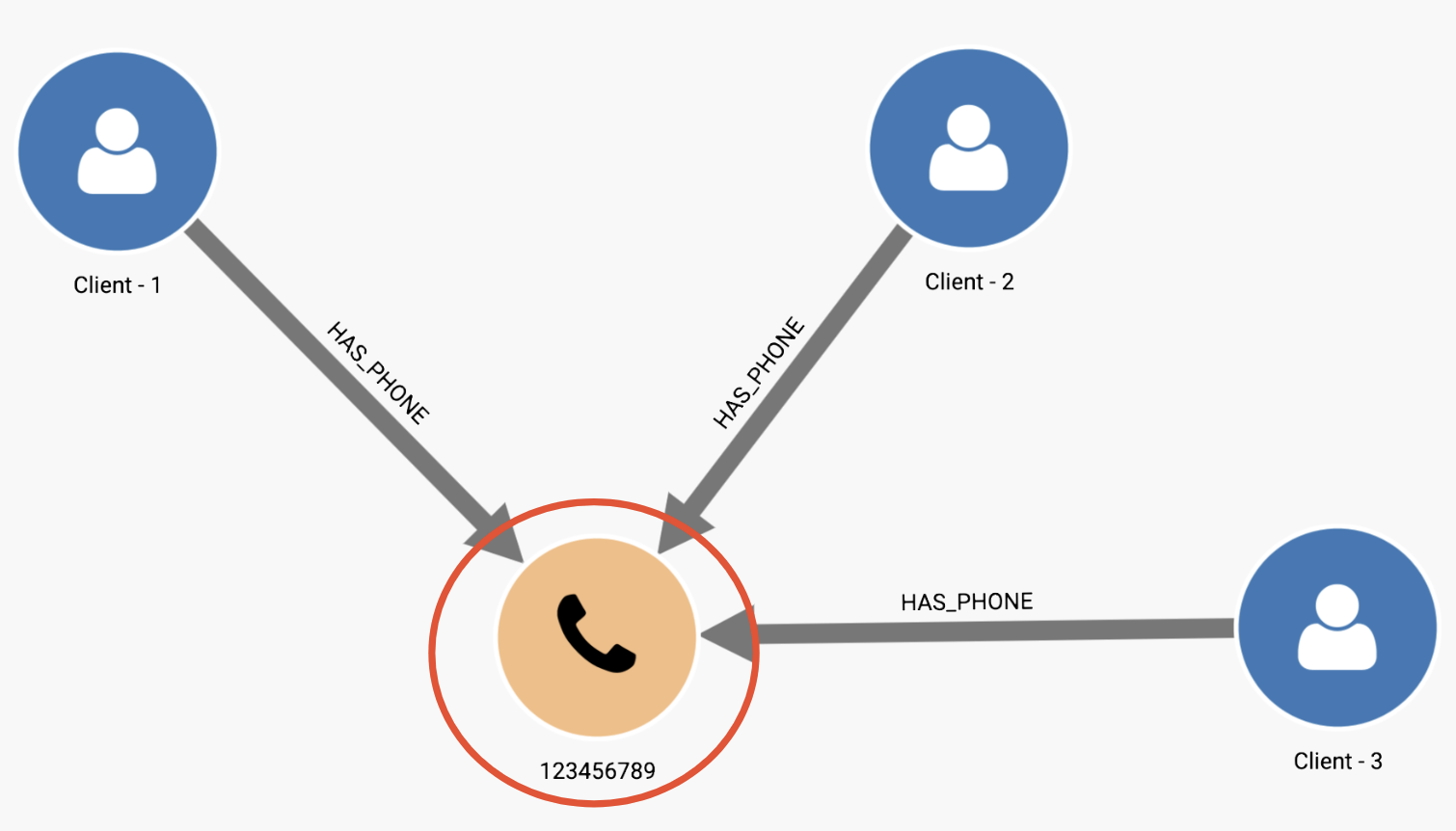

Say we have a due diligence database containing people, banks, account numbers, addresses, telephone numbers, and email addresses. It's a large database -- several hundred million nodes -- so to fully index the graph would be a lengthy process, and may ultimately be unnecessary if we are only interested in full-text search on a restricted set of node and relationship properties.

The first question we should ask is about this data we are interested in. Maybe as part of our hypothesis about the data, we want to focus on a certain subset of connections that we believe form patterns of interest.

Let's say that we want to focus on identifying information only -- we think that there are cases where this information is shared by multiple individuals, for instance, and we're interested in analyzing them. The nodes of interest to us will therefore be those nodes which represent pure identifiers and not entities themselves -- addresses, telephone numbers, account numbers, and email addresses. We can tell Elasticsearch to index these and only these by adding the following line to our Neo4j configuration file:

    com.graphaware.module.ES.node=hasLabel('Address') || hasLabel('Telephone') || hasLabel('Email') || hasLabel('Account')

(com.graphaware.module.ES.node=!hasLabel('Person') && !hasLabel('Bank') will also work.)

And since we aren't including people or banks, we also want to focus on the relationships relevant to our nodes of interest:

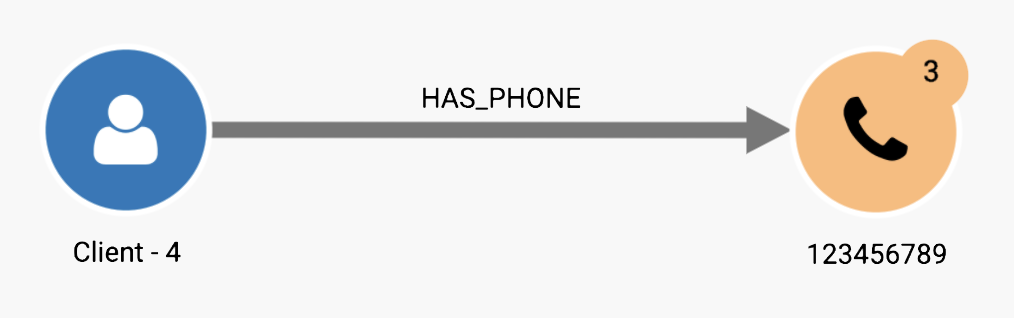

    com.graphaware.module.ES.relationship=isType('HAS_ADDRESS') || isType('HAS_PHONE') || isType('HAS_EMAIL') || isType('HAS_ACCOUNT')

If we want to be even more specific, we can select only those properties which are relevant to our inquiry by adding com.graphaware.module.ES.node.property to our config. We follow this with a list of keys, or node property names, (separated by || (OR) statements) that we want to include in our index:

    com.graphaware.module.ES.node.property=key == 'address1' || key == 'city' || key == 'state' || key == 'number' || key == 'email' || key == 'accountNumber'

And if we only want to index nodes and NOT relationships, we can disable relationship indexation completely:

    com.graphaware.module.ES.relationship=(false)

Since relationships are often more numerous than nodes, excluding them from our index can significantly reduce its storage footprint.

What if we finish our initial analysis and conclude that we need to know more about the financial institutions in our graph? We want to add banks to our index, but we don't need to search everything that is stored on them. Furthermore, we're only interested in banks in a certain region -- Europe, say. We can add the right banks back by modifying our original directive to read:

    com.graphaware.module.ES.node=hasLabel('Address') || hasLabel('Telephone') || hasLabel('Email') || hasLabel('Account') || (hasLabel('Bank') && getProperty('bankRegion', 'None') == 'Europe')

And we can add a few bank properties like this:

    com.graphaware.module.ES.node.property=key == 'address1' || key == 'city' || key == 'state' || key == 'number' || key == 'email' || key == 'accountNumber' || key == 'bankName' || key == 'bankIdentifier' || key == 'bankRegion'

Keep in mind that these keys will be indexed for every node on which they appear. If banks have a state property, for example, it will be added to the index for state. It's worth remembering this when constructing your data model. Namespace collisions can prove computationally costly.
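Long inclusion expressions like the ones in this example are easy to mistype, so they can be generated from plain lists. The sketch below covers only the simple OR-chains of hasLabel, isType and key comparisons; the helper names are hypothetical, and compound rules such as the bank region filter would still need to be written by hand.

```python
def any_of(function_name, values):
    """Chain calls such as hasLabel('A') || hasLabel('B')."""
    return " || ".join(f"{function_name}('{value}')" for value in values)


def property_keys(keys):
    """Chain comparisons such as key == 'city' || key == 'email'."""
    return " || ".join(f"key == '{key}'" for key in keys)


def partial_indexation_lines(labels, node_keys, relationship_types=None):
    """Build neo4j.conf lines; relationships are disabled when no type is given."""
    lines = [
        "com.graphaware.module.ES.node=" + any_of("hasLabel", labels),
        "com.graphaware.module.ES.node.property=" + property_keys(node_keys),
    ]
    if relationship_types:
        lines.append("com.graphaware.module.ES.relationship=" + any_of("isType", relationship_types))
    else:
        lines.append("com.graphaware.module.ES.relationship=(false)")
    return lines


for line in partial_indexation_lines(["Address", "Email"], ["city", "email"]):
    print(line)
```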

Neo4j-to-Elasticsearch, in combination with partial indexation strategies, can be a very efficient way of handling index synchronization for large graphs. Even if your graph is small enough to index in full, it's worth considering to what extent it may grow. By examining your problem space and choosing only a subset of the information available to you for your traversal needs, you may save yourself future headaches and maximize the efficiency of your graph.

Azure search is the recommended full text search solution for Cosmos DB.

Linkurious requires an index on nodes to perform a search. If you do not have a configured index yet, you can create one via the azure portal.

Additionally, you can create an index on edges if you want to search them as well with Linkurious.

Please review the description of each index attribute and make sure the label field is marked as filterable. Linkurious will not be able to use the index otherwise.

To edit the AzureSearch data-source configuration,

you can either use the Web user-interface

or edit the configuration file located at linkurious/data/config/production.json.

Example configuration:

    "dataSources": [
      {
        "graphdb": {
          "vendor": "cosmosDb",
          "url": "https://your-service.gremlin.cosmosdb.azure.com:443/",
          "database": "your-graph-database",
          "collection": "your-collection",
          "primaryKey": "your-account-primary-key"
        },
        "index": {
          "vendor": "azureSearch",
          "url": "https://your-search-service.search.windows.net",
          "apiKey": "your-search-service-admin-api-key",
          "nodeIndexName": "your-node-index-name",
          "edgeIndexName": "your-edge-index-name"
        }
      }
    ]

Supported index options with Azure Search:

- url (required): URL of the search service
- apiKey (required): Primary Admin Key of the search service
- nodeIndexName (required): Name of the node index of your graph database
- edgeIndexName (optional): Name of the edge index of your graph database

Please refer to the Azure Search online documentation for details on how to load data into Azure Search.
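As a quick sanity check before restarting the server, the required options listed above can be verified with a few lines of Python. This is a minimal sketch: the function name is hypothetical, and it only checks that the documented keys are present, not that the service is reachable.

```python
# Required keys for an "azureSearch" index block, as listed in this section.
REQUIRED_AZURE_SEARCH_OPTIONS = ("url", "apiKey", "nodeIndexName")


def missing_azure_search_options(index_config):
    """Return the required options that are absent or empty in an index block."""
    if index_config.get("vendor") != "azureSearch":
        raise ValueError("index vendor must be 'azureSearch'")
    return [key for key in REQUIRED_AZURE_SEARCH_OPTIONS if not index_config.get(key)]


index_block = {
    "vendor": "azureSearch",
    "url": "https://your-search-service.search.windows.net",
    "apiKey": "your-search-service-admin-api-key",
    # nodeIndexName left out on purpose to show the check.
}
print(missing_azure_search_options(index_block))
```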

Note that today, in Azure Search, it's not possible to search on numbers or dates. If you are interested in this feature, please get in touch.

Elasticsearch is supported from version 6.x using the elasticSearch connector.

Linkurious Enterprise ships with an embedded Elasticsearch server (version 7.10.2).

ATTENTION: The internal Elasticsearch is not intended to be used for graph databases > 50,000,000 nodes. Though indexation and search performance are ultimately dependent on hardware limitations, it has been configured to prevent horizontal scaling and so is not an efficient choice for large DBs. It is meant instead as a quick indexation strategy for POCs or small deployments.

To use the Linkurious Enterprise embedded Elasticsearch instance, set the following index configuration keys:

- vendor must be elasticSearch
- host must be "127.0.0.1"
- port must be 9201

Example configuration:

    "dataSources": [
      {
        "graph": {
          "vendor": "neo4j",
          "url": "http://127.0.0.1:7474"
        },
        "index": {
          "vendor": "elasticSearch",
          "host": "127.0.0.1",
          "port": 9201
        }
      }
    ]

Search connector elasticSearch supports the following options:

- host (required): Elasticsearch server host
- port (required): Elasticsearch server port (standard is 9200)
- https: true to connect to Elasticsearch via HTTPS
- user: Elasticsearch username (if you are using X-Pack Security, a.k.a. ElasticShield)
- password: Elasticsearch password
- forceReindex: true to re-index the data-source each time Linkurious Enterprise starts
- skipEdgeIndexation: true to skip edge indexation (edges won't be searchable)
- analyzer (default: "standard"): The analyzer used to process text in a specific language (see available language analyzers)

Example configuration:

    "dataSources": [
      {
        "graph": {
          "vendor": "neo4j",
          "url": "http://127.0.0.1:7474"
        },
        "index": {
          "vendor": "elasticSearch",
          "host": "192.168.1.122",
          "port": 9200,
          "skipEdgeIndexation": true
        }
      }
    ]

Please check here how to configure search on numerical and date properties.
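If you generate configuration files from a script, an index block like the one above can be assembled programmatically. The following Python sketch is only an illustration: the helper name is hypothetical, and the set of accepted options is simply the list documented in this section.

```python
import json

# Optional keys documented for the elasticSearch connector in this section.
OPTIONAL_ES_OPTIONS = {
    "https", "user", "password", "forceReindex", "skipEdgeIndexation", "analyzer",
}


def elasticsearch_index_config(host, port, **options):
    """Build an "index" block for the elasticSearch connector."""
    unknown = set(options) - OPTIONAL_ES_OPTIONS
    if unknown:
        raise ValueError(f"unsupported options: {sorted(unknown)}")
    config = {"vendor": "elasticSearch", "host": host, "port": port}
    config.update(options)
    return config


# Same values as the external-cluster example above.
print(json.dumps(elasticsearch_index_config("192.168.1.122", 9200, skipEdgeIndexation=True), indent=2))
```

Rejecting unknown keys early helps catch typos such as a misspelled option name before the server is restarted.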

Linkurious Enterprise ships with an embedded Elasticsearch instance that works out of the box. The embedded instance works well for small to average database sizes, but for search-heavy use-cases or very large databases, configuring your own Elasticsearch cluster might be necessary.

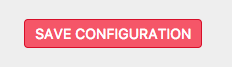

An easy way to deploy an easy-to-scale Elasticsearch cluster yourself is to use Amazon Web Services (AWS).

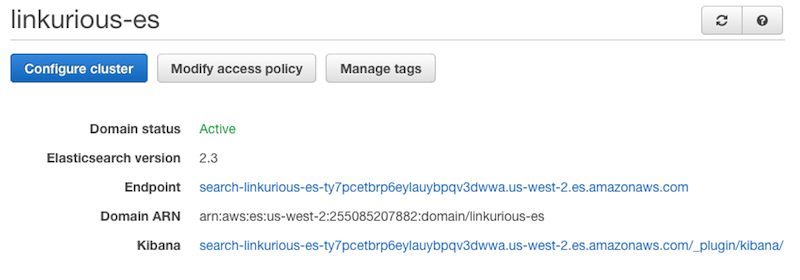

Please follow these steps to create and configure your AWS Elasticsearch cluster with Linkurious Enterprise:

Visit the Amazon Web Services website and create your account (or log in if you already have one).

Visit the Amazon Elasticsearch Service page, log in and follow the steps to create an Elasticsearch cluster:

Select Services > Elasticsearch Service

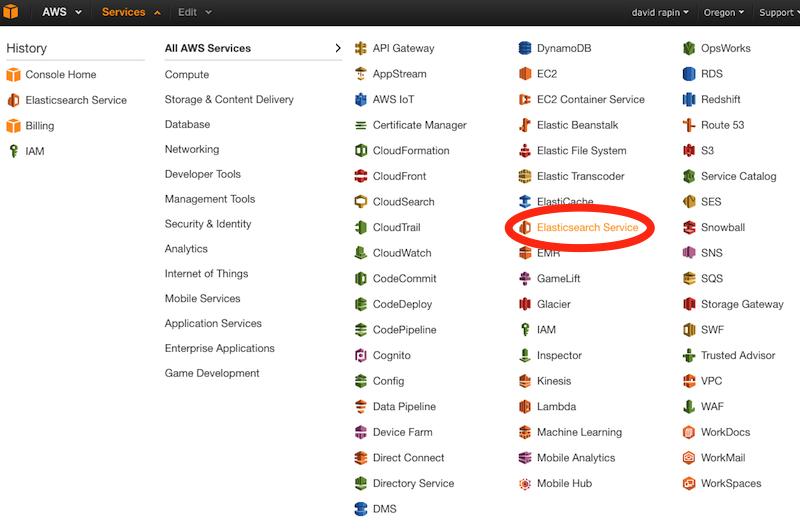

Click get started

Name your cluster (1) and select an Elasticsearch version supported by Linkurious Enterprise, that is 6.x or later (2), then click Next

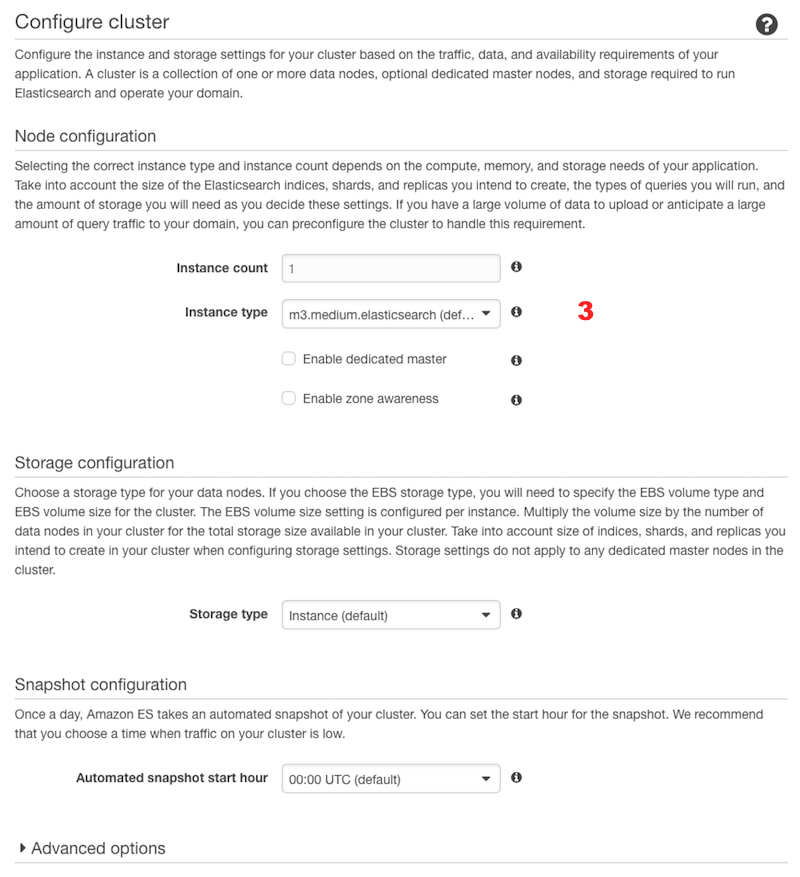

Select the instance type, the number of instances and the number of dedicated masters in your cluster (3), depending on your database's size

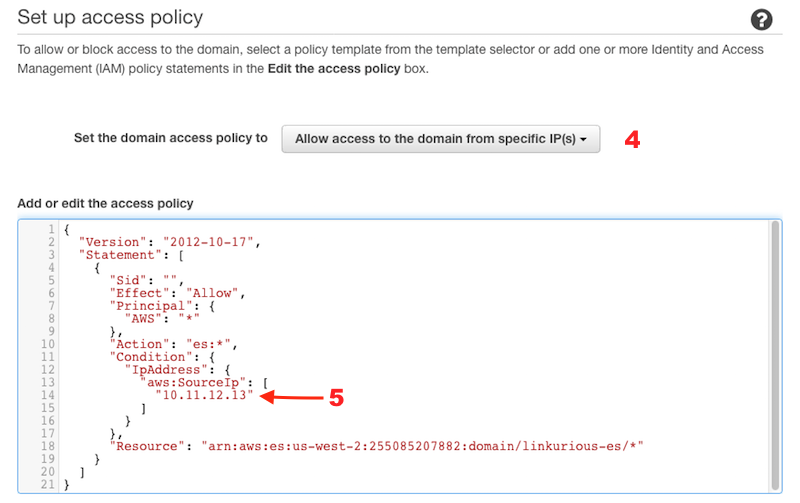

Configure the access policy for your cluster. Use access from specific IP (4) and enter the public IP address of your Linkurious Enterprise server (5)

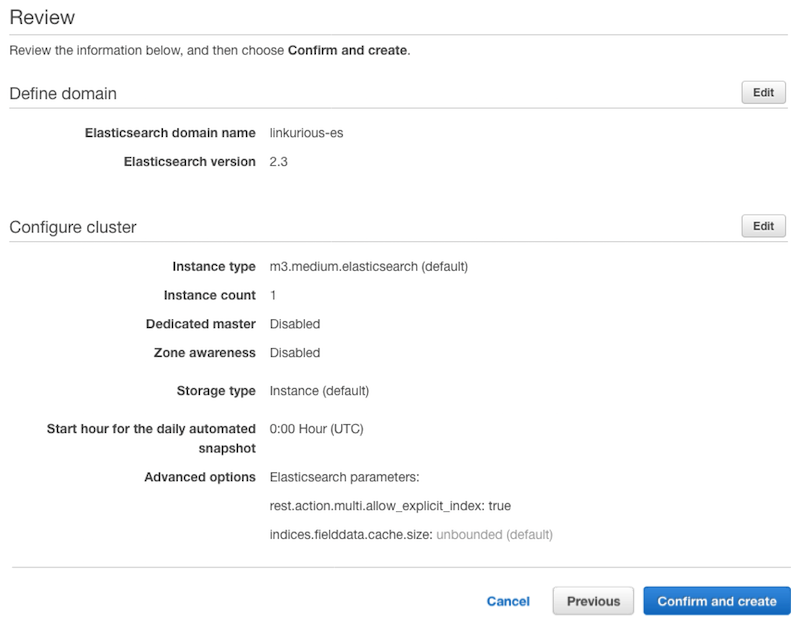

Review your configuration and confirm the creation of the cluster

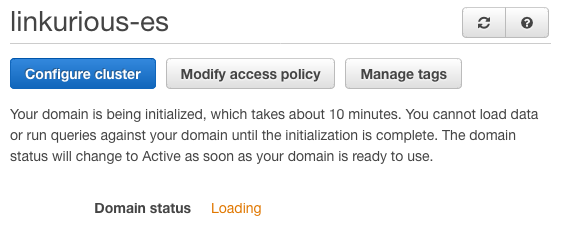

Wait until the cluster is deployed (usually less than an hour)

When your cluster is deployed, copy the Endpoint host name

Configure Elasticsearch as explained here

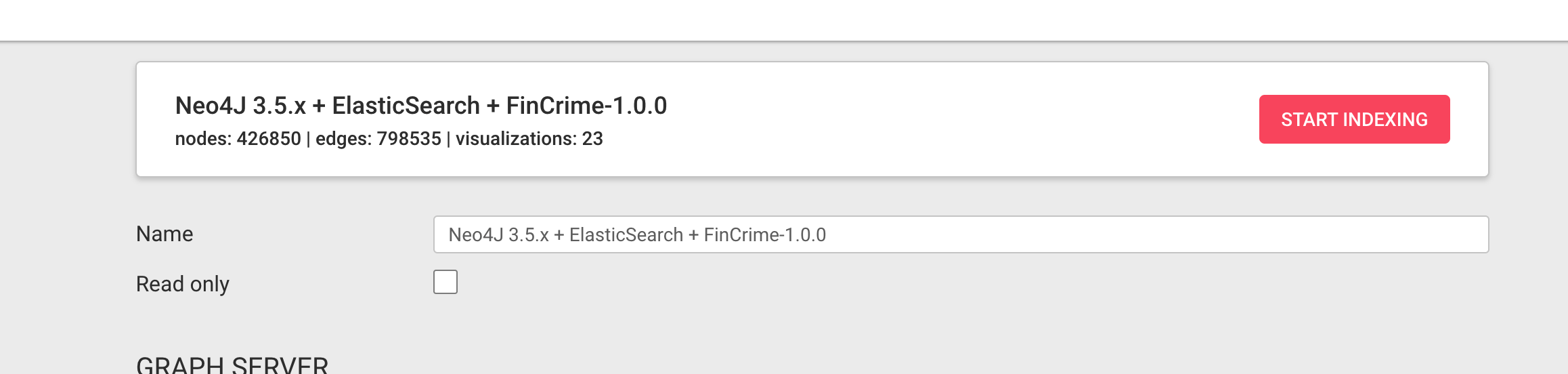

Incremental indexing allows you to keep your Elasticsearch index in sync with your Neo4j graph database.

Linkurious Enterprise will index new and updated items from your database at a regular interval. This way, you avoid a complete reindex every time you update your data.

This is achieved by keeping track of a timestamp on every node and edge in the database, thereby allowing the indexer to only consider the items with newer timestamps and consequently reducing the indexing time.
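The idea of timestamp-based selection can be sketched in a few lines of Python. This is a conceptual illustration only, not the indexer's actual implementation; the function name, the item structure and the `lastEditTimestamp` property name are assumptions made for the example.

```python
def items_to_reindex(items, last_indexed_at, timestamp_property="lastEditTimestamp"):
    """Select the items whose timestamp is newer than the previous indexing run."""
    selected = []
    for item in items:
        timestamp = item.get(timestamp_property)
        # Items without a timestamp cannot be detected as changed in this sketch.
        if timestamp is not None and timestamp > last_indexed_at:
            selected.append(item)
    return selected


nodes = [
    {"id": 1, "lastEditTimestamp": 1000},
    {"id": 2, "lastEditTimestamp": 2500},
    {"id": 3},  # never touched by a trigger
]
print([node["id"] for node in items_to_reindex(nodes, last_indexed_at=2000)])
```

Only the items edited since the last run are considered, which is what keeps each increment much cheaper than a full re-index.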

You should consider this option if your database holds a significant number of items and needs to be updated frequently.

1. APOC

Linkurious Enterprise relies on APOC triggers to ensure that every node and edge created or updated has a timestamp.

You need to make sure that you have installed APOC correctly and enabled APOC triggers. You can find all the information you need to install APOC from Neo4j documentation.

You can quickly verify that you have installed everything correctly by executing the following command from your Neo4j browser:

    CALL apoc.trigger.list()

2. A property name

You need to carefully choose the property that will hold the timestamp on every node and edge of your database. As a consequence of this choice, Linkurious Enterprise will create triggers that store a timestamp on this property for all new and updated nodes and edges.

This means that any information stored on that property will be overwritten by the trigger.

After you have installed and configured APOC, you can enable incremental indexing from the data-source configuration page or by editing the configuration file with the following options:

- incrementalIndexation: You can enable incremental indexing by switching this option to true.
- timestampPropertyName: You must then provide the timestamp property name to keep track of during incremental indexing, e.g. "lastEditTimestamp".

After you have enabled incremental indexing for the first time, Linkurious Enterprise needs to perform a complete re-indexing of the data-source to ensure that every item has been indexed. You simply need to click on the "Start indexing" button to complete the configuration.

Linkurious Enterprise will index your data-source incrementally from that point forward using the timestamps generated by the APOC triggers.

Once you have set up the incremental indexation, you can configure the frequency at which it will be triggered. You can customize this schedule by adding a cron expression to your Elasticsearch configuration.

You can make this change in the configuration file located at linkurious/data/config/production.json, under dataSources.index for each data-source.

By default, we have set all incremental indexing to be launched every Sunday at 12PM, but you can change it to the frequency that most suits your needs. We advise that you schedule your increments to run after you have updated your database with new information. Here are some examples of cron expressions:

- Every 5 minutes: "incrementalIndexationCron": "*/5 * * * *"
- Every 30 minutes: "incrementalIndexationCron": "*/30 * * * *"
- Every hour: "incrementalIndexationCron": "0 * * * *"
- Every day at 15:00: "incrementalIndexationCron": "00 15 * * *"
- On the 1st and 15th of every month at midnight: "incrementalIndexationCron": "00 00 1,15 * *"
- On the 1st of every month at midnight: "incrementalIndexationCron": "00 00 1 * *"

If you need to index your data-source at irregular intervals, you can trigger it manually with the "Start indexation" button in the data-source configuration page or trigger it automatically with the start indexation API.

You can disable automatic indexing with the following configuration: "incrementalIndexationCron": "none"
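When the schedule is managed by a deployment script, the option can be set on a loaded configuration as in the Python sketch below. It assumes that dataSources is a list of data-source objects, each with an index block; the helper name is hypothetical and the validation is deliberately shallow (it only counts the five cron fields).

```python
def set_incremental_cron(config, source_index, cron):
    """Set incrementalIndexationCron on one data-source of a loaded configuration."""
    # "none" disables automatic indexing; otherwise expect a 5-field cron expression.
    if cron != "none" and len(cron.split()) != 5:
        raise ValueError(f"expected 5 cron fields or 'none', got: {cron!r}")
    config["dataSources"][source_index]["index"]["incrementalIndexationCron"] = cron
    return config


# Minimal in-memory stand-in for the parsed content of production.json.
config = {"dataSources": [{"index": {"vendor": "elasticSearch", "incrementalIndexation": True}}]}
set_incremental_cron(config, 0, "*/30 * * * *")
print(config["dataSources"][0]["index"]["incrementalIndexationCron"])
```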

Here are some important considerations when choosing incremental indexing.

Incremental indexing is only available on Neo4j v3.5.1 and above.

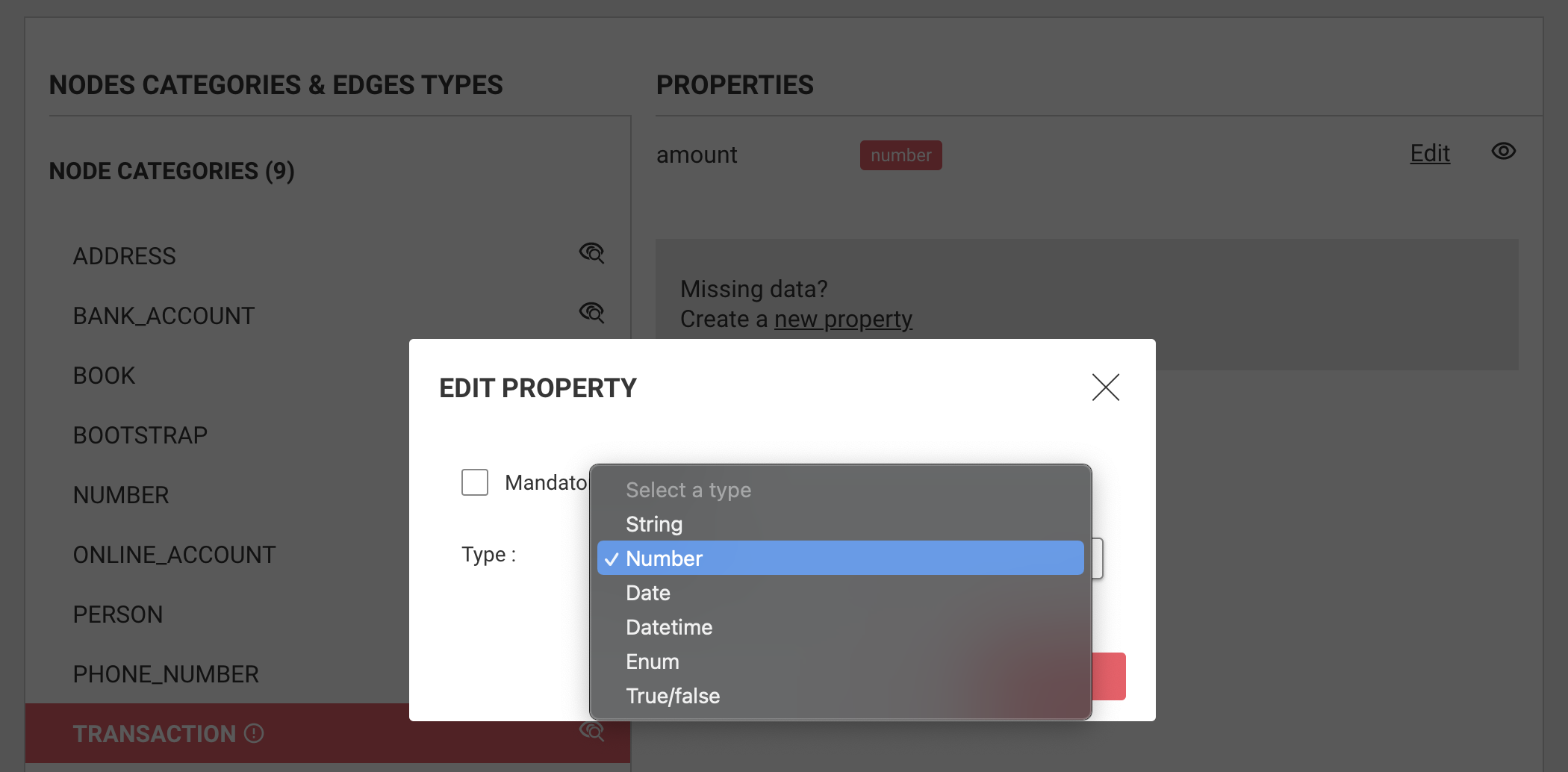

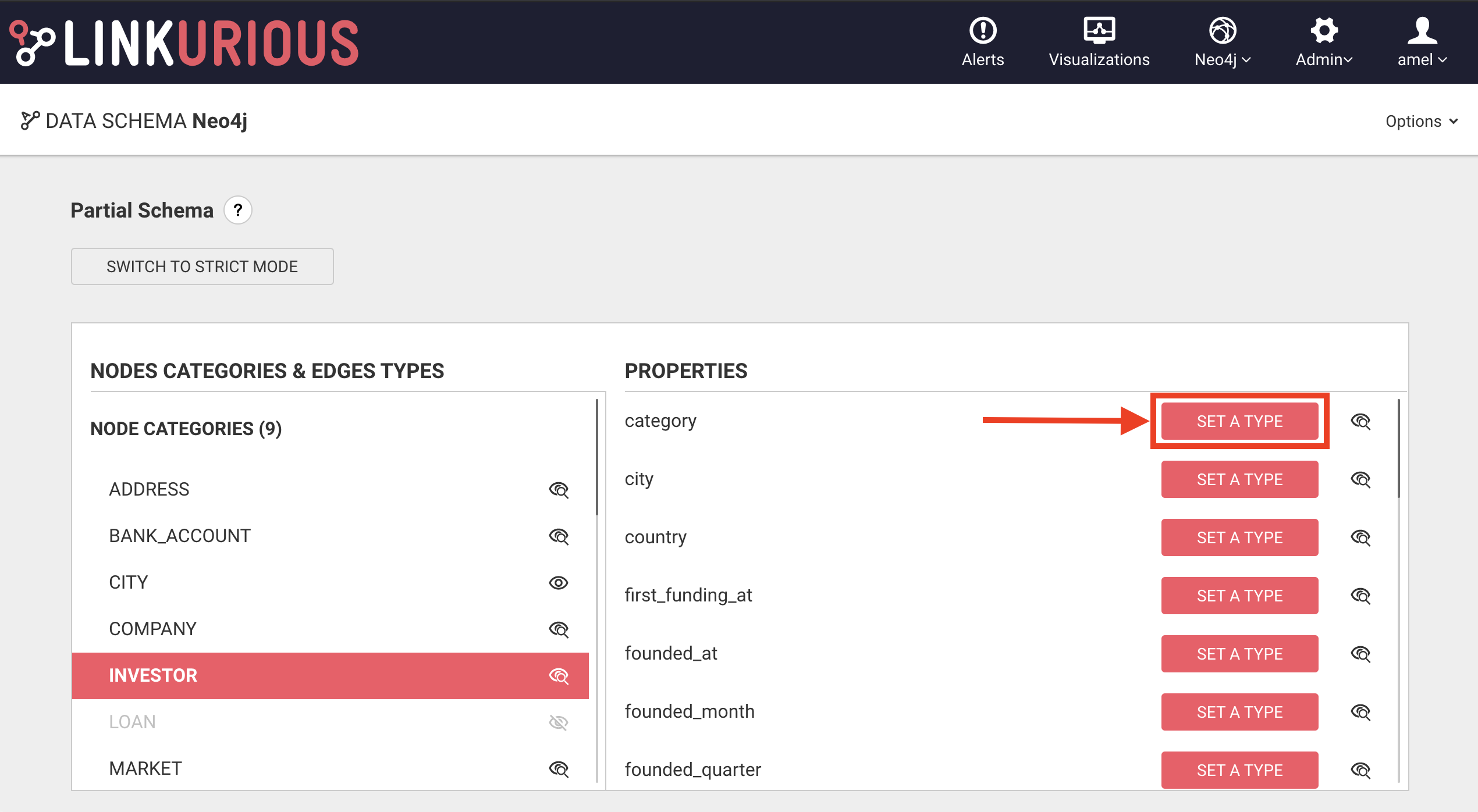

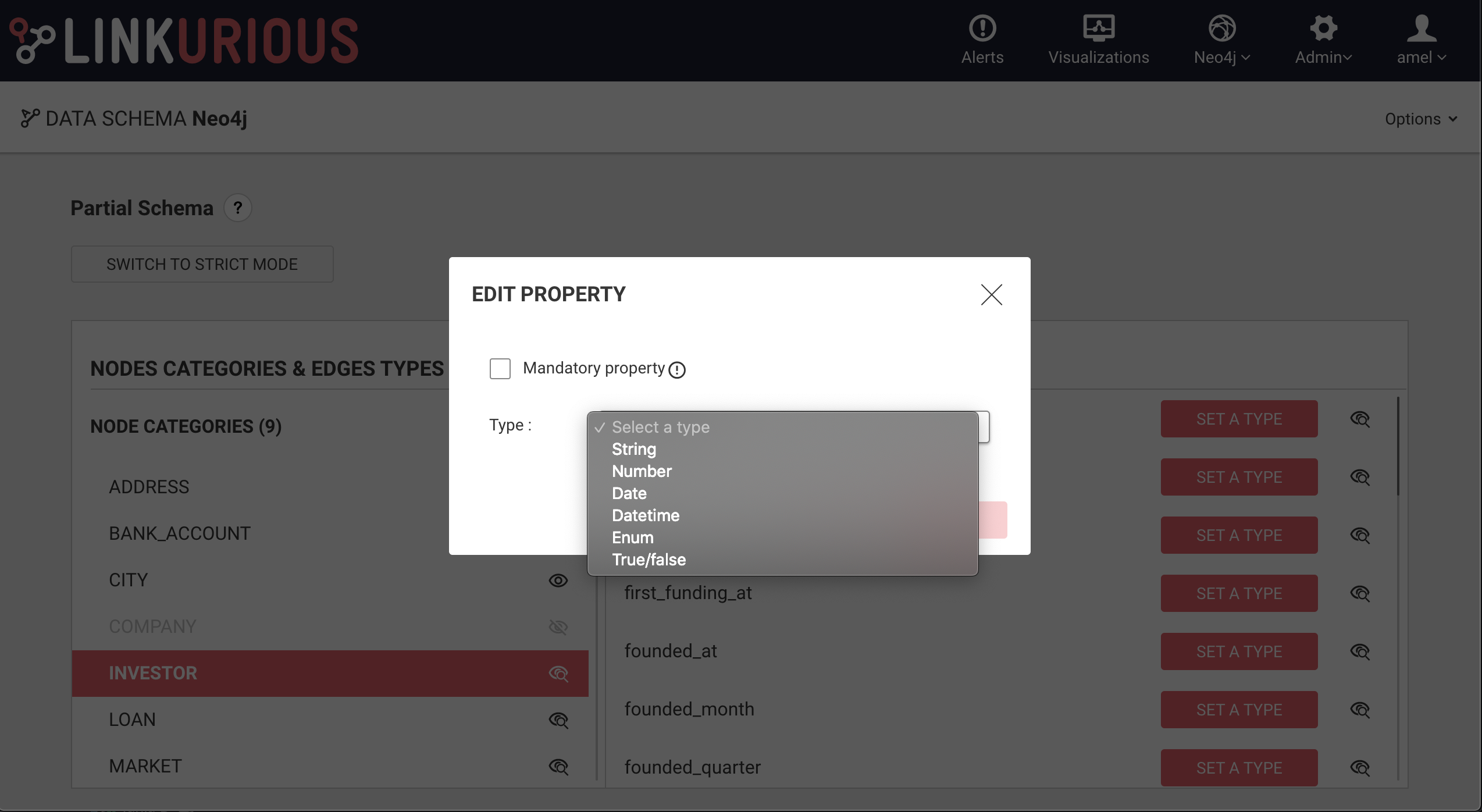

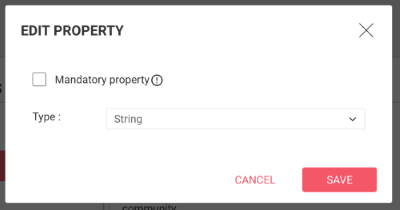

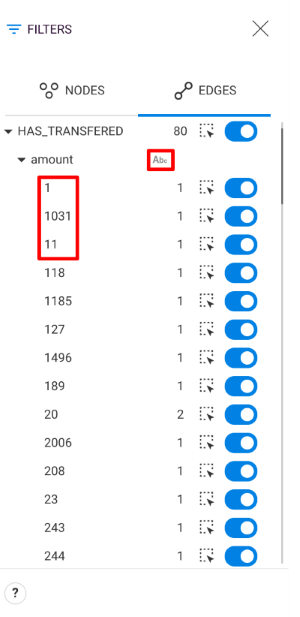

Elasticsearch is required to be able to perform numerical and date search.

To properly configure Elasticsearch for number and date search please follow these steps:

Note that if you change the types after the indexing, you will need to re-index for the changes to apply.

Linkurious Enterprise uses an SQL database to store user-data. The database contains:

By default, the user-data store is a SQLite file-based database. This makes Linkurious Enterprise easy to deploy.

To see the list of database providers and versions supported by Linkurious Enterprise, please view the compatibility matrix.

For deployment at scale (more than a couple of users), we recommend switching to one of the supported server-based databases:

MySQL 8 is not yet supported in Linkurious Enterprise 3.0.14.

To get started with Linkurious Enterprise, there are a few requirements for the user-data store to function properly.

Linkurious Enterprise provides many options to configure the user-data store connection. Below, you can find the configuration documentation and configuration examples for popular DBMS solutions.

In the Linkurious Enterprise configuration file, the database connection is configured under the db key.

- name (default: "linkurious"): Database name for Linkurious Enterprise to use.
- username (optional): Username for the database user
- password (optional): Password for the database user
- options: Child object that contains connection options
  - dialect (default: "sqlite"): The database dialect to be used. Supports: "sqlite", "mysql", "mariadb", "mssql"
  - host: Host address of the database
  - port: Port address of the database
  - storage (only for "sqlite"): Storage location for the database file
  - dialectOptions (optional): Child object that contains dialect specific additional options
    - ssl (default: false, boolean): Whether to use SSL for database connection
    - encrypt (default: false, boolean): Whether to enable encryption (useful for cloud instances with encryption enabled by default)
    - multiSubnetFailover (default: false, boolean): Whether the driver attempts parallel connections to the failover IP addresses during a multi-subnet failover for higher availability.

SQLite is the default user-data store of Linkurious Enterprise.

    "db": {
      "name": "linkurious",
      "options": {
        "dialect": "sqlite",
        "storage": "server/database.sqlite"
      }
    }

MySQL:

    "db": {
      "name": "linkurious",
      "username": "MYSQL_USER_NAME",
      "password": "MYSQL_PASSWORD",
      "options": {
        "dialect": "mysql",
        "host": "MYSQL_HOST",
        "port": 3306
      }
    }

Microsoft SQL Server:

    "db": {
      "name": "linkurious",
      "username": "MSSQL_USER_NAME",
      "password": "MSSQL_PASSWORD",
      "options": {
        "dialect": "mssql",
        "host": "MSSQL_HOST",
        "port": 1433
      }
    }

MariaDB:

    "db": {
      "name": "linkurious",
      "username": "MARIADB_USER_NAME",
      "password": "MARIADB_PASSWORD",
      "options": {
        "dialect": "mariadb",
        "host": "MARIADB_HOST",
        "port": 3306
      }
    }

The default storage system for Linkurious Enterprise is SQLite.
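The four examples above share the same shape, which the following Python sketch makes explicit. The helper name is hypothetical, and the ports are just the values used in the examples above; adapt them to your own database server.

```python
import json

# Ports taken from the example configurations above.
EXAMPLE_PORTS = {"mysql": 3306, "mariadb": 3306, "mssql": 1433}


def db_config(dialect, host=None, username=None, password=None,
              name="linkurious", storage="server/database.sqlite"):
    """Build the value of the "db" key for one of the supported dialects."""
    if dialect == "sqlite":
        # File-based store: no host or credentials, only a storage path.
        return {"name": name, "options": {"dialect": "sqlite", "storage": storage}}
    if dialect not in EXAMPLE_PORTS:
        raise ValueError(f"unsupported dialect: {dialect}")
    if host is None:
        raise ValueError("host is required for server-based databases")
    return {
        "name": name,
        "username": username,
        "password": password,
        "options": {"dialect": dialect, "host": host, "port": EXAMPLE_PORTS[dialect]},
    }


print(json.dumps({"db": db_config("mysql", "MYSQL_HOST", "MYSQL_USER_NAME", "MYSQL_PASSWORD")}, indent=2))
```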

Configuring Linkurious Enterprise to work with MySQL is straightforward if you don't need to migrate data from SQLite.

Migrating data from SQLite to an external database is possible, but we recommend this procedure only if restarting from scratch with the new configuration is not a viable option.

Our public resources contain the tool needed to perform the migration from SQLite to one of the supported databases, as well as the detailed list of steps to use and, if needed, configure the tool.

If you need help with the procedure please contact us at support@linkurio.us.

If you are using the SQLite database (the default), you only need to follow the standard Linkurious Enterprise backup procedure.

If you are using another database to store the Linkurious Enterprise user-data, please refer to one of the following guides:

The web server of Linkurious Enterprise delivers the application to end users through HTTP/S.

It is configured in the server configuration key within the configuration file (linkurious/data/config/production.json):

Within the server key:

- listenPort (default: 3000): The port of the web server

Some firewalls block network traffic on ports other than 80 (HTTP). Since only root users can listen on ports lower than 1024, you may want to reroute traffic from 80 to 3000 as follows:

    sudo iptables -t nat -A PREROUTING -p tcp --dport 80 -j REDIRECT --to-port 3000

If you use SSL, you can add a second rule to redirect 443 to 3443:

    sudo iptables -t nat -A PREROUTING -p tcp --dport 443 -j REDIRECT --to-port 3443

Within the server key:

- baseFolder (default: /): The base path where Linkurious Enterprise will be found

In some cases, you may want to host Linkurious Enterprise on a path other than root for a particular domain. For example, if you want Linkurious Enterprise to be reachable at http(s)://HOST:PORT/linkurious you should set baseFolder equal to linkurious.

Within the server key:

- domain (default: "localhost"): The domain or sub-domain used to access the web server. It is mandatory to edit it for publishing visualizations online. It is also used to restrict the validity of cookies to a domain or sub-domain.
- publicPortHttp (default: listenPort): The public HTTP port of the web server.
- publicPortHttps (default: listenPortHttps): The public HTTPS port of the web server.

In some cases, Linkurious Enterprise needs to generate links to itself (for example when generating a link to a widget). For that, the server needs to know its public domain and port to generate those links.

The public port can be different from the actual port if you use traffic rerouting (using a firewall or a reverse-proxy). In the example above (traffic rerouting), the actual HTTP port (listenPort) is 3000, but the public HTTP port (publicPortHttp) is 80.
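To see how these settings combine, the Python sketch below derives a public HTTP URL from a server block. It illustrates the relationship between domain, listenPort, publicPortHttp and baseFolder under the defaults documented above; it is not the code Linkurious Enterprise uses to generate links, and the function name is hypothetical.

```python
def public_http_url(server):
    """Derive the public HTTP URL implied by a "server" configuration block."""
    domain = server.get("domain", "localhost")
    listen_port = server.get("listenPort", 3000)
    # publicPortHttp defaults to listenPort when it is not set.
    public_port = server.get("publicPortHttp", listen_port)
    base_folder = server.get("baseFolder", "/").strip("/")
    # Port 80 is the HTTP default and can be left out of the URL.
    port_part = "" if public_port == 80 else f":{public_port}"
    path = f"/{base_folder}" if base_folder else "/"
    return f"http://{domain}{port_part}{path}"


# Traffic rerouted from 80 to 3000, application served under /linkurious.
rerouted = {"domain": "example.com", "listenPort": 3000, "publicPortHttp": 80, "baseFolder": "linkurious"}
print(public_http_url(rerouted))
```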

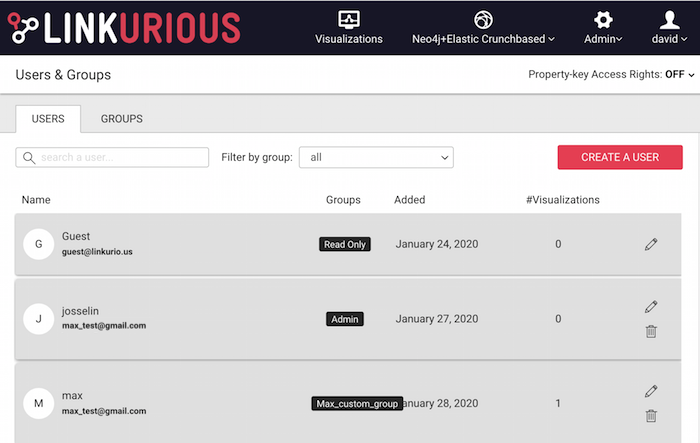

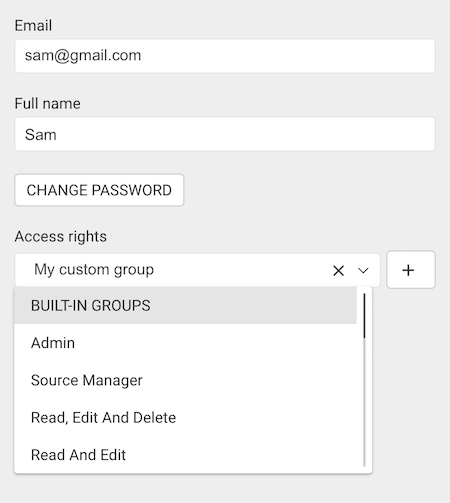

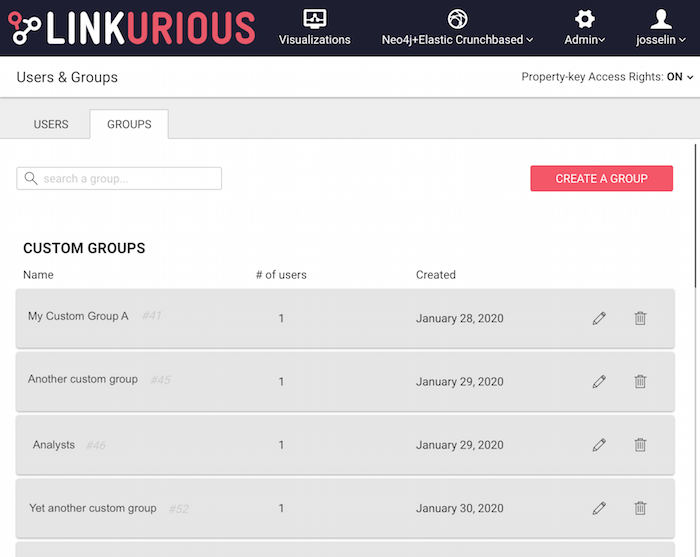

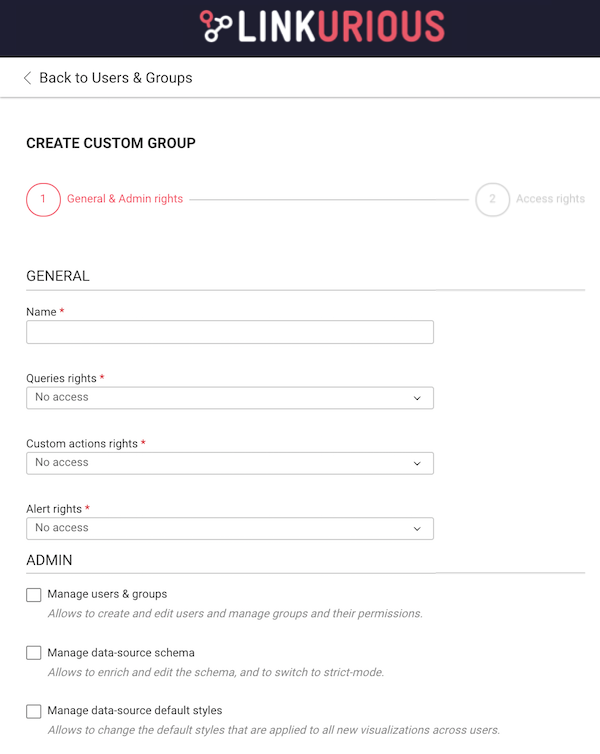

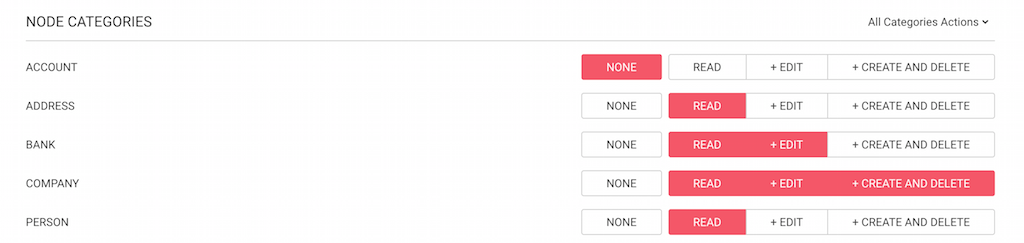

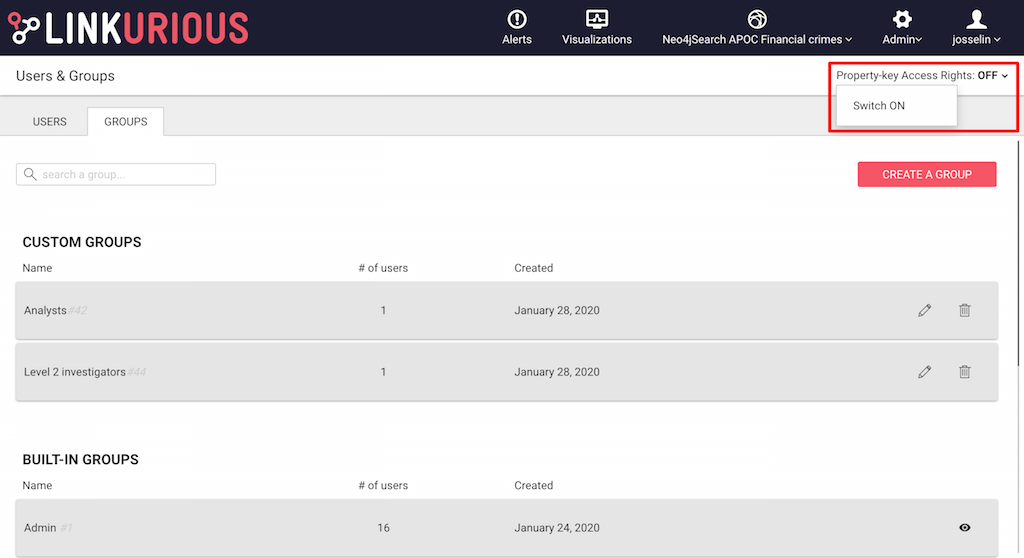

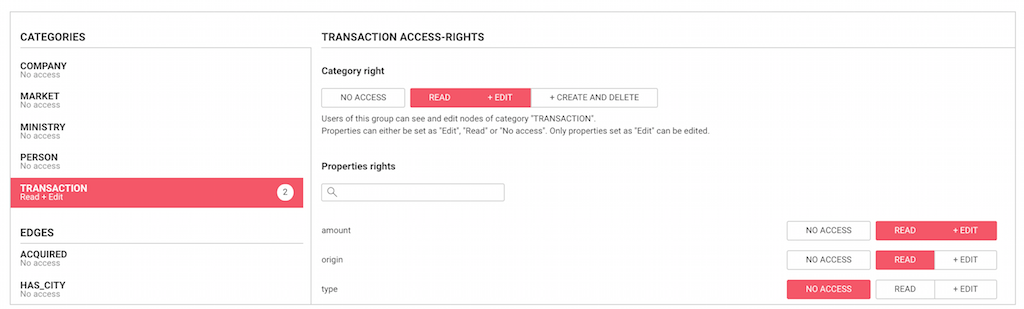

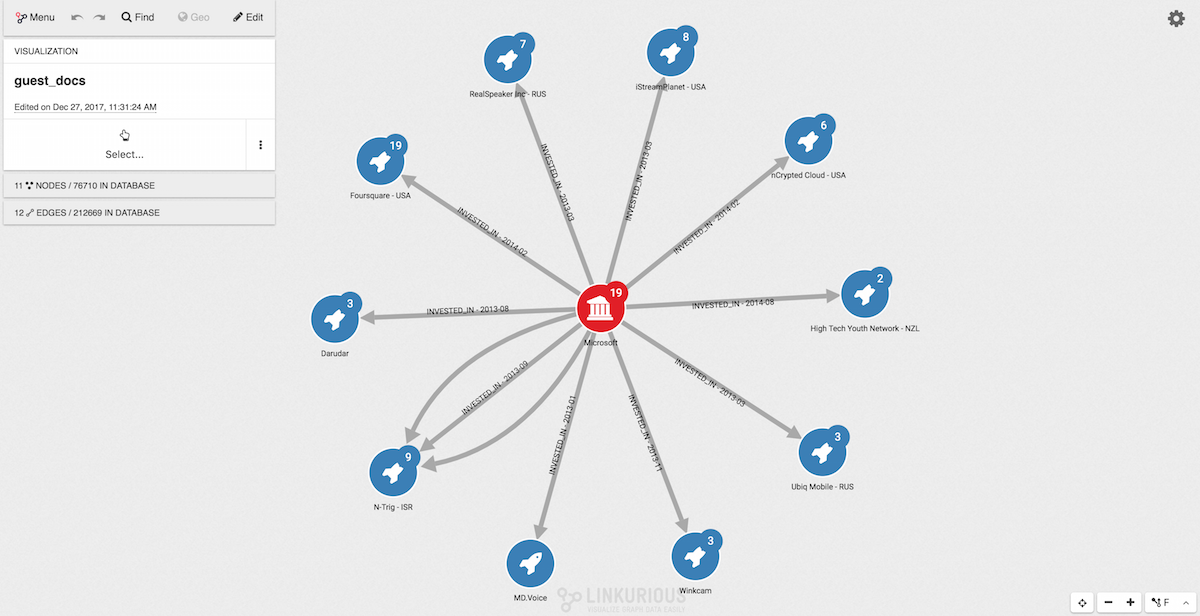

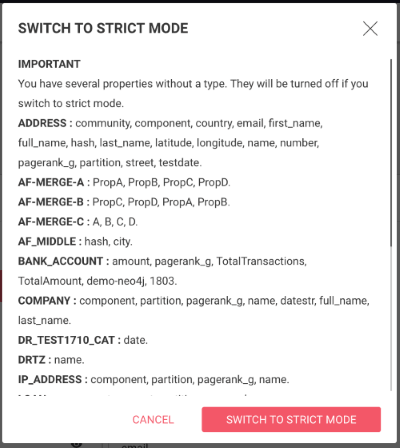

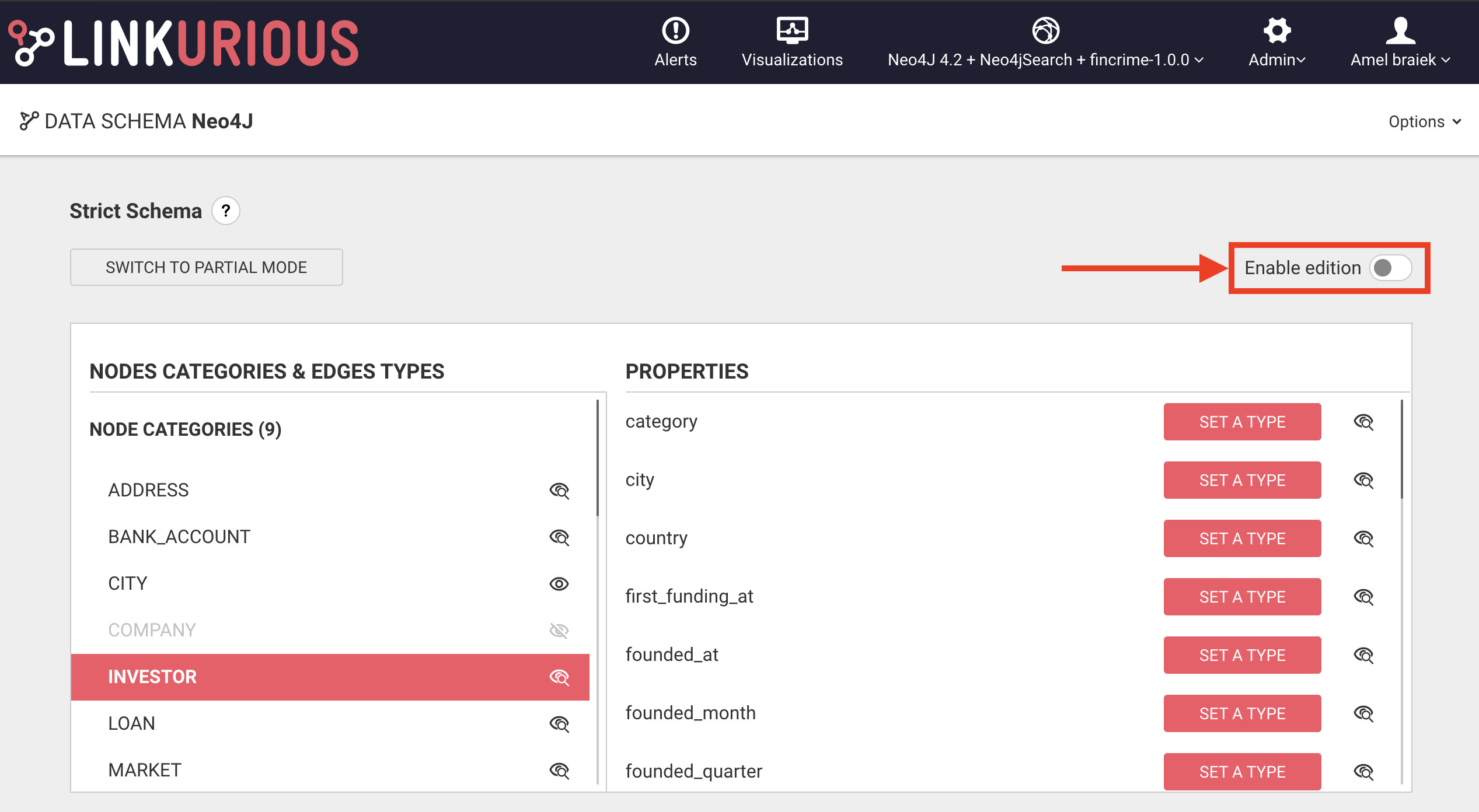

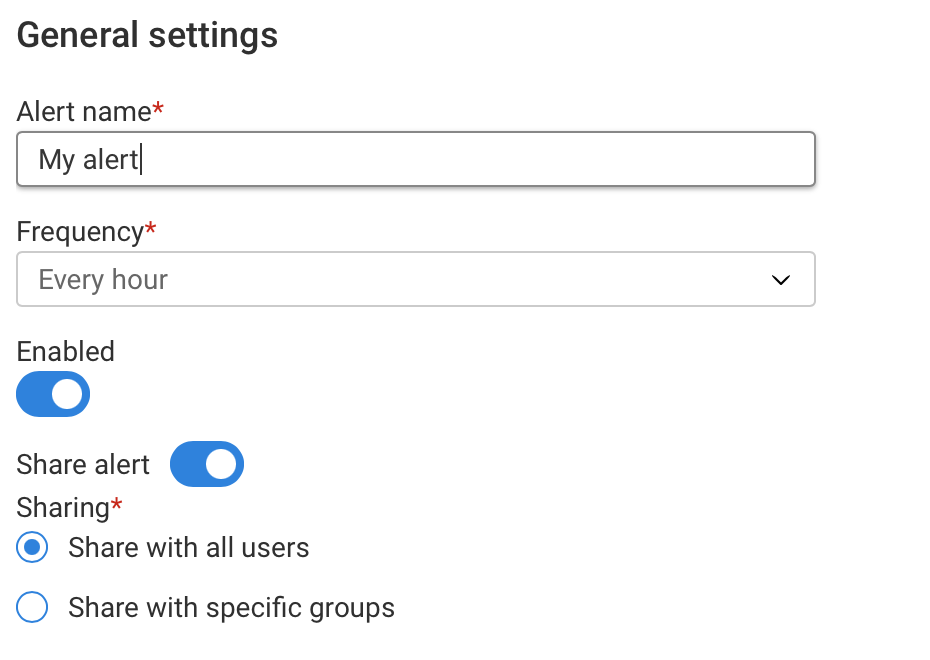

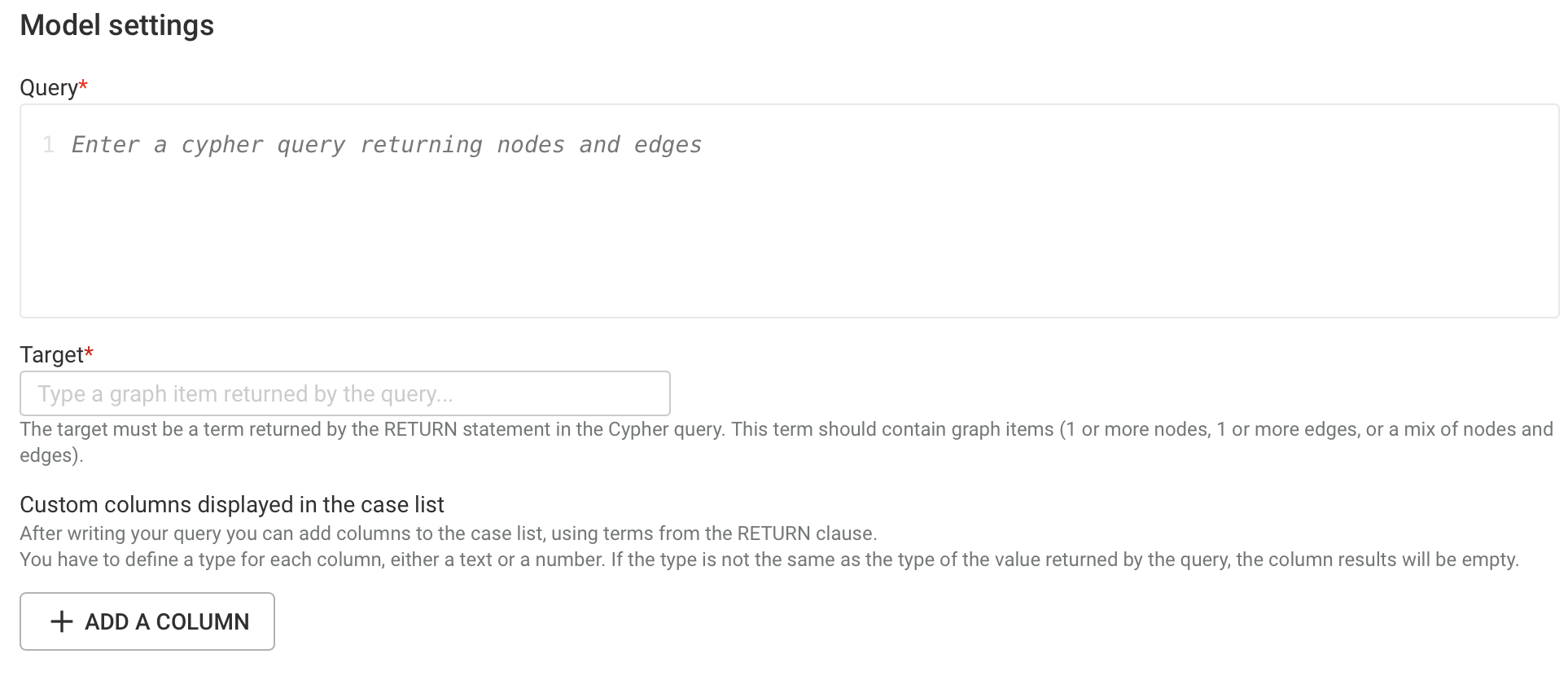

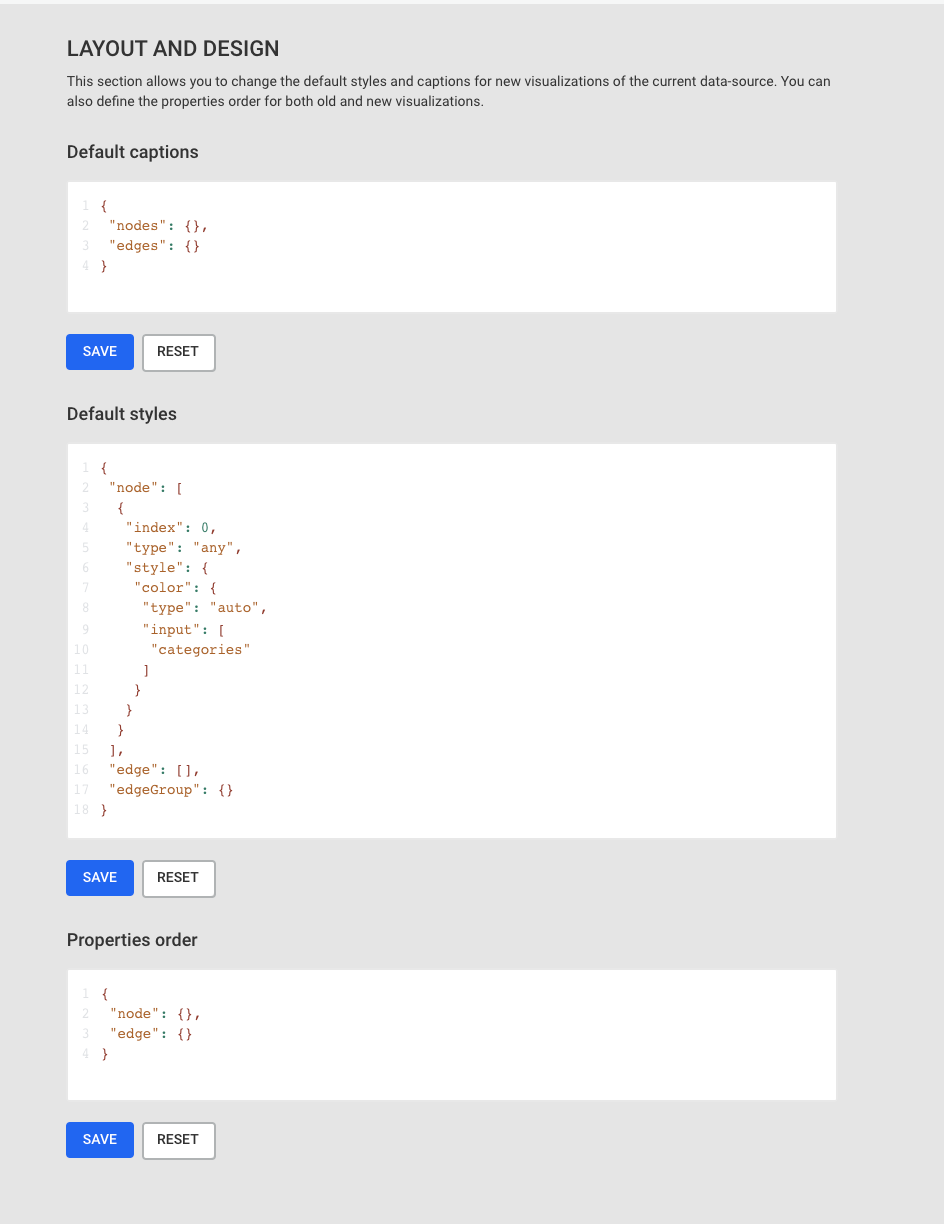

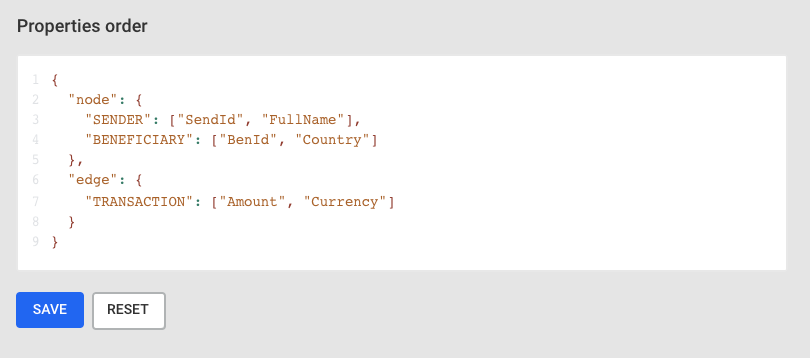

Within the server key: